Routing Using HAProxy

Imagine you have a project that creates a task list for a logged-in user. I can think of two entities:

- User

- Task

Each entity gets its own microservice:

- User Service

- Task Service

Microservice:1 hosts the Task Service:

```
GET http://<your-machine>:8081/tasks/get
GET http://<your-machine>:8081/tasks/get/{id}
POST http://<your-machine>:8081/tasks/ + payload
PUT http://<your-machine>:8081/tasks/ + payload
```

...and so on. Microservice:2 hosts the User Service:

```
GET http://<your-machine>:8082/users/get
GET http://<your-machine>:8082/users/get/{id}
POST http://<your-machine>:8082/users/ + payload
PUT http://<your-machine>:8082/users/ + payload
```

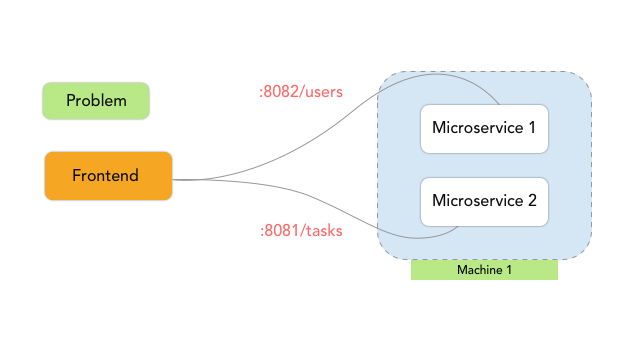

...and so on.

The problem here is that the API exposes deployment details to the frontend. The microservices run on ports 8081 and 8082, so the frontend has to know about both ports. Every microservice you add needs another open port: with 25 microservices, you would need to open 25 ports! In short, this is a routing problem, and we can get around it with HAProxy, a load balancer that can also route requests by URL path. Here's how:

- Install HAProxy on the machine where you host your microservices.

```
sudo add-apt-repository -y ppa:vbernat/haproxy-1.5
sudo apt-get update
sudo apt-get install -y haproxy
```

- After installation, open /etc/haproxy/haproxy.cfg for editing and add the following configuration:

```
# Define the access control lists (ACLs) and routing rules.
frontend localnodes
    # Listen only for requests arriving on port 9000.
    bind *:9000
    # Handle traffic as HTTP so that request paths can be inspected.
    mode http
    # url_tasks matches every request whose path begins with /tasks.
    acl url_tasks path_beg /tasks
    # url_users matches every request whose path begins with /users.
    acl url_users path_beg /users
    use_backend tasks-backend if url_tasks
    use_backend user-backend if url_users

backend tasks-backend
    mode http
    balance roundrobin
    # Add an X-Forwarded-For header carrying the client's IP address.
    option forwardfor
    # Forward all /tasks requests to the microservice on localhost:8081;
    # "check" enables health checks on this server.
    server web01 127.0.0.1:8081 check

backend user-backend
    mode http
    balance roundrobin
    option forwardfor
    # Forward all /users requests to the microservice on localhost:8082.
    server web01 127.0.0.1:8082 check
```

- After making the configuration change, restart the HAProxy service:

```
sudo service haproxy restart
```
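To make the routing rules concrete, here is a small Python model of what the `localnodes` frontend does: it compares the request path against each `path_beg` prefix in the order the rules appear and picks the first matching backend. This is a sketch of the logic only, not how HAProxy is implemented; `ROUTES`, `BACKENDS`, `choose_backend`, and `upstream_url` are hypothetical names.

```python
# A simplified model of the frontend's ACL routing (not HAProxy's real implementation).
# Each rule mirrors one "acl ... path_beg ..." line plus its "use_backend" line,
# checked in the order they appear in haproxy.cfg.
from typing import Optional

ROUTES = [
    ("/tasks", "tasks-backend"),  # acl url_tasks path_beg /tasks
    ("/users", "user-backend"),   # acl url_users path_beg /users
]

# Backend name -> server address, taken from the "server" line of each backend.
BACKENDS = {
    "tasks-backend": "127.0.0.1:8081",
    "user-backend": "127.0.0.1:8082",
}


def choose_backend(path: str) -> Optional[str]:
    """Return the first backend whose prefix matches the path, like path_beg."""
    for prefix, backend in ROUTES:
        if path.startswith(prefix):
            return backend
    return None  # no ACL matched, and the config declares no default_backend


def upstream_url(path: str) -> Optional[str]:
    """Build the URL the request would be forwarded to, keeping the original path."""
    backend = choose_backend(path)
    if backend is None:
        return None
    return f"http://{BACKENDS[backend]}{path}"


if __name__ == "__main__":
    for p in ["/tasks/get", "/users/get/42", "/health"]:
        print(p, "->", upstream_url(p))
```

Note that `path_beg` is a plain prefix match, so in this model (as in the config) a path such as `/tasks-archive` would also be routed to `tasks-backend`.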

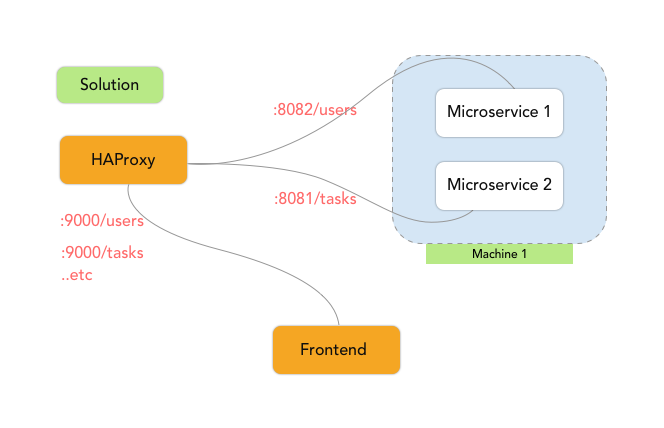

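Here is a pictorial view of the problem and the proposed solution using HAProxy. Before using HAProxy, all the ports are open so that the frontend can make API calls directly to each microservice. After using HAProxy, the frontend makes every API call to port 9000, and HAProxy routes it to the right microservice.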

Advantages of Using HAProxy

- The frontend does not need to know which service runs on which port.

- Only a single port (9000 here) needs to be open. The frontend sends every request to that port, and HAProxy forwards it to the correct microservice based on the path prefixes mapped in the configuration.
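From the frontend's side, the difference shows up in how request URLs are built. The Python sketch below contrasts the two setups; `your-machine` stands in for the `<your-machine>` placeholder used earlier, and `direct_url` and `proxied_url` are hypothetical helper names.

```python
# Hypothetical frontend helpers contrasting direct access with access through HAProxy.
# "your-machine" is a placeholder host, as in the endpoint examples above.

HOST = "your-machine"

# Before HAProxy: the frontend must know every service's port.
SERVICE_PORTS = {
    "tasks": 8081,  # Microservice:1 {Task Service}
    "users": 8082,  # Microservice:2 {User Service}
}


def direct_url(service: str, path: str) -> str:
    """Build a URL that targets a microservice's own port."""
    return f"http://{HOST}:{SERVICE_PORTS[service]}{path}"


# After HAProxy: one port for everything; the path prefix selects the service.
HAPROXY_PORT = 9000  # matches "bind *:9000" in haproxy.cfg


def proxied_url(path: str) -> str:
    """Build a URL that goes through HAProxy's single frontend port."""
    return f"http://{HOST}:{HAPROXY_PORT}{path}"


if __name__ == "__main__":
    print(direct_url("tasks", "/tasks/get"))  # http://your-machine:8081/tasks/get
    print(proxied_url("/tasks/get"))          # http://your-machine:9000/tasks/get
    print(proxied_url("/users/get/42"))       # http://your-machine:9000/users/get/42
```

With the first style, every new microservice means a new entry in `SERVICE_PORTS` and another open port; with the second, the frontend code stays the same and only a new `acl`/`use_backend` pair is added to haproxy.cfg.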

Load Balancing Using HAProxy

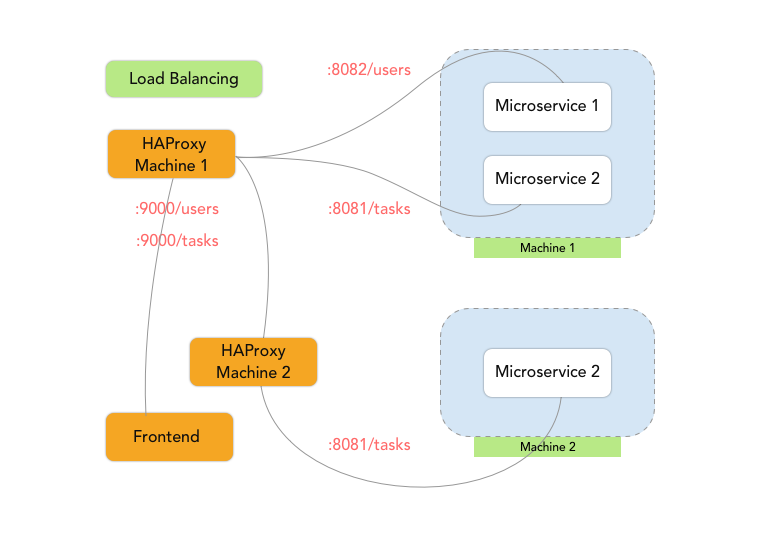

Imagine Microservice:1 {Task Service} is receiving more requests than a single instance can comfortably handle, so you need to balance the load.

- Spin up a new server, Machine 2, to host another instance of Microservice:1 {Task Service}. Now we have something like this:

    Machine 1 hosts:
    - Microservice:1 {Task Service} on port 8081
    - Microservice:2 {User Service} on port 8082

    Machine 2 hosts:
    - Microservice:1 {Task Service} on port 8081

- Install HAProxy on Machine 2.

- Open /etc/haproxy/haproxy.cfg for editing.

- Add the following routing configuration:

```
# Define the access control list (ACL) and routing rule.
frontend localnodes
    # Listen only for requests arriving on port 9000.
    bind *:9000
    # Handle traffic as HTTP so that request paths can be inspected.
    mode http
    # url_tasks matches every request whose path begins with /tasks.
    acl url_tasks path_beg /tasks
    use_backend tasks-backend if url_tasks

backend tasks-backend
    mode http
    balance roundrobin
    option forwardfor
    # Forward all /tasks requests to the Task Service on localhost:8081.
    server web01 localhost:8081 check
```

- On Machine 1, update the tasks-backend section so that it also points to the HAProxy instance on Machine 2:

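```
backend tasks-backend
    mode http
    balance roundrobin
    option forwardfor
    # Forward /tasks requests in round-robin order either to the Task Service
    # on Machine 1 (port 8081) or to HAProxy on Machine 2 (port 9000).
    server web01 127.0.0.1:8081 check
    server web02 MACHINE_2_IP:9000 check
```

Requests sent to web02 arrive at HAProxy on Machine 2, which passes them to its local Task Service on port 8081. Replace MACHINE_2_IP with Machine 2's actual IP address, then restart HAProxy on both machines:

```
sudo service haproxy restart
```

The image at the start of this section gives a pictorial view of load balancing using HAProxy.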
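With two `server` lines in `tasks-backend`, `balance roundrobin` hands requests to `web01` and `web02` in turn, and the `check` keyword lets HAProxy stop sending traffic to a server that fails its health checks. The Python class below is a simplified model of that behaviour, assuming equal server weights and health state supplied by the caller; it is not HAProxy's actual scheduler, `RoundRobinBackend` is a hypothetical name, and `MACHINE_2_IP` remains a placeholder.

```python
# Simplified model of "balance roundrobin" with health checks (equal weights assumed).
from typing import Dict, List, Set


class RoundRobinBackend:
    def __init__(self, servers: Dict[str, str]) -> None:
        # Server name -> address, in the order of the "server" lines.
        self.names: List[str] = list(servers)
        self.addresses = servers
        self.down: Set[str] = set()  # names currently failing their health check
        self._next = 0               # index of the next server to try

    def mark_down(self, name: str) -> None:
        self.down.add(name)

    def mark_up(self, name: str) -> None:
        self.down.discard(name)

    def pick(self) -> str:
        """Return the address of the next healthy server in rotation."""
        for _ in range(len(self.names)):
            name = self.names[self._next]
            self._next = (self._next + 1) % len(self.names)
            if name not in self.down:
                return self.addresses[name]
        raise RuntimeError("no healthy server available")


if __name__ == "__main__":
    backend = RoundRobinBackend({
        "web01": "127.0.0.1:8081",
        "web02": "MACHINE_2_IP:9000",  # placeholder, as in the config above
    })
    print([backend.pick() for _ in range(4)])
    # ['127.0.0.1:8081', 'MACHINE_2_IP:9000', '127.0.0.1:8081', 'MACHINE_2_IP:9000']
    backend.mark_down("web02")  # e.g. Machine 2 stops answering health checks
    print([backend.pick() for _ in range(2)])
    # ['127.0.0.1:8081', '127.0.0.1:8081']
```

The second half of the example is the high-availability story in miniature: while web02 is marked down, every request still lands on a working server.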

Advantages of Using HAProxy

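- High availability and scalability: with the `check` option, HAProxy health-checks each server and stops routing requests to one that fails, and you can add capacity by adding more `server` lines to a backend.

- Multiple load-balancing algorithms are available, such as `roundrobin` (used here), `leastconn`, and `source`.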
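To illustrate why the choice of algorithm matters, here is a simplified Python model of the idea behind `leastconn`: each new request goes to the server with the fewest active connections. Equal weights are assumed and, in this sketch, ties go to the server listed first; it is not HAProxy's implementation, and `LeastConnBackend` is a hypothetical name. In the real configuration, the switch would be replacing `balance roundrobin` with `balance leastconn`.

```python
# Simplified model of the "balance leastconn" idea (equal weights assumed).
from typing import Dict, List


class LeastConnBackend:
    def __init__(self, names: List[str]) -> None:
        # Number of requests each server is currently handling.
        self.active: Dict[str, int] = {name: 0 for name in names}

    def acquire(self) -> str:
        """Send a new request to the least-busy server; ties go to the first listed."""
        name = min(self.active, key=self.active.__getitem__)
        self.active[name] += 1
        return name

    def release(self, name: str) -> None:
        """Call when a request handled by `name` finishes."""
        self.active[name] -= 1


if __name__ == "__main__":
    backend = LeastConnBackend(["web01", "web02"])
    first = backend.acquire()   # web01 (both idle, tie goes to the first)
    second = backend.acquire()  # web02
    backend.release(first)      # web01's request finishes
    print(backend.acquire())    # web01, since it now has fewer active requests
```

Round robin ignores how long requests take, whereas this approach favours whichever server is least busy right now, which helps when request durations vary widely.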