Let’s start with the obvious question, what is a tokenizer? A tokenizer in Natural Language Processing (NLP) is a text preprocessing step where the text is split into tokens. Tokens can be sentences, words, or any other unit that makes up a text.

Every NLP package has a word tokenizer implemented in it. But there is a certain challenge associated with Malayalam tokenization.

Malayalam is a highly agglutinative language like most Dravidian languages. Agglutinative languages are the ones in which you can combine words and derive a new word.

അവൻ ഇത് എന്താണ് പറയുന്ന അത്.

അവൻ ഇതെന്താണ് പറയുന്നത്.

Look at the Malayalam sentences above. The agglutinative nature of Malayalam lets us derive the second sentence from the first. In English and other non-agglutinative languages, the process of word tokenization is less challenging because word tokens are separated by spaces or punctuations. Even though just splitting with spaces and punctuations will not properly tokenize an English sentence, exceptions to this rule can still be captured with rule engines.

If we apply the word tokenization rules for English here, then our vocabulary will have ‘ഇത്’, ‘എന്താണ്’ and ‘ഇതെന്താണ്’. This will drastically increase the size of the vocabulary. What effect will an increased size of the vocabulary have? The first problem is that other processes in the NLP pipeline like word embedding will become hard to do on such a large vocabulary. Another problem is that this approach will cripple the NLP problem we are trying to solve. For example, in a sentiment analysis problem, the purpose of tokenization is to find tokens that mostly affect the decision and classify them. In Malayalam, a negative word like ‘ഇല്ല’ can be combined with a lot of other words. Capturing and classifying them all will result in the system becoming inefficient.

This is the point where we identified that word tokenization was not what we needed; we needed subword tokenization.

Solution for Subword Tokenization

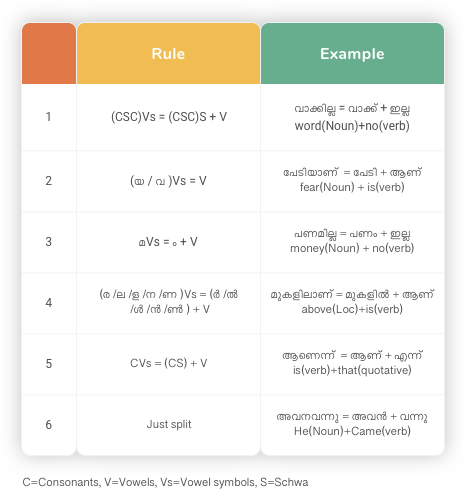

Exploring how these words combine to form new words led us to the combining rules of Indic languages, which are known as Sandhi rules. These are the rules which come into play when combining words. Further exploration into Malayalam Sandhi rules led us to a research paper A Sandhi Splitter for Malayalam by IIIT, Hyderabad. This paper analyzes the Sandhi rules in Malayalam.

Malayalam language elements are classified into Consonants, Vowels, Vowel symbols, and Schwa. Vowels are the Swaraksharam, Consonants are the Vyanjanaksharam, Vowel symbols are the Consonant sound modifiers, and Schwa is the letter ‘ഁ’ in Malayalam.

Creating a rule engine with these Sandhi rules is not a great choice. The problem with such an approach is that a word can be split in multiple ways using this rule and choosing the correct one will need a dictionary search.

Our next approach was to let a model learn the patterns in Sandhi splitting and use that model to predict the subwords of a given word. The first challenge of such a task is finding the dataset required for training a model. Our search for such a dataset ended up in us creating one. Our idea was to use the Sandhi rules to join words from a dictionary and to search a corpus to confirm whether the new word exists in it.

We used Malayalam Wiktionary and combined the words using the Sandhi rules. We had a corpus of Malayalam news articles collected through web scraping. This data generation process resulted in the creation of around 2,00,000 Sandhi split words. This dataset is publicly available here.

The next step was to identify an architecture for our model. We researched into the current implementation of such a model and found a paper by IBM Research, Sanskrit Sandhi Splitting using seq2(seq)2 . This paper discusses the implementation of a sequence-to-sequence model for Sanskrit Sandhi splitting.

The architecture in the paper was an encoder double-decoder architecture. The encoder was a deep Bi-LSTM and the decoder was a two-layer LSTM with a global attention layer. The process of training is split into two. In the first phase, the encoder, attention layer, and first decoder are trained. In the second phase, the first decoder is frozen, and the second decoder is trained by allowing the attention layer and encoder to be fine-tuned. The first decoder (location decoder) identifies the split location of the given word and the second decoder (character decoder) predicts the actual sub words.

For example, if the input is the word ‘ഇതെന്താണ്’ then the location decoder predicts ‘001000000’ and the character decoder predicts ‘ഇത്+എന്താണ് ‘. The ‘1’ in the location decoder output identifies the split location to be between third and fourth characters.

In the first phase of training, the attention layer identifies the split location, and this information is used in the second phase to predict the subwords.

Implementation of Subword Tokenization

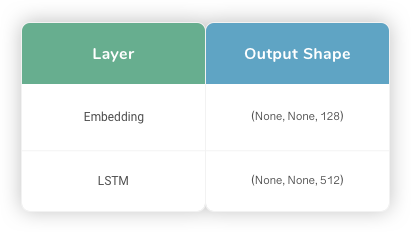

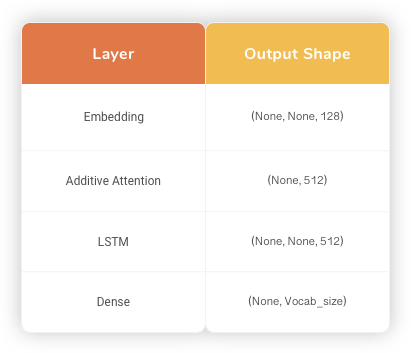

We implemented the architecture in Tensorflow 2.0 and trained the model on our data. After experimenting with different hyperparameters, we got the best result with the architecture given below.

We used single-layer LSTM units with 512 hidden units whereas the researchers used two-layer Bi-LSMT units with 512 hidden units. Our embedding dimension was 128, which was the same as that of the research study.

We used Adam as the optimizer and Sparse Categorical Cross-entropy as the loss function. We split 20% of our dataset to test data. We measured the performance of our model based on the percentage of exact match outputs. The best result we achieved so far is a 78% exact match in test data.

Custom Architecture for Subword Tokenization

We parallelly trained a custom architecture in which we used two sequence-to-sequence models serially. The first model resembles the location decoder. The second model uses the same architecture but changes the dataset. We identified the split location from the first model and in the input of the second model, the character at the split location was changed to character + 150th character in Unicode. This was done to pass the first decoder output to the second model. The second model then resembles the character decoder and predicts the subwords.

The hyperparameters of this architecture were changed. The embedding dimension used was 256 and the hidden unit size of LSTM was 1024. The rest of the network was kept the same as the double decoder model suggested in the IBM research paper. The first model in this architecture achieved an accuracy of 81% and the second model achieved an accuracy of 92.8%. Connecting this model end to end achieved an accuracy of 76%.

Conclusion

Currently, the double decoder model has superior performance over our custom architecture. A manual evaluation of a small percentage of random words in our dataset shows that the data generation process had faults and around 20% of our dataset contains wrong splits. We believe a clean dataset will further improve the accuracy of this model. The models and code of these networks are published here.

The subword tokenizer solves the problem of tokenization for the Malayalam language. This procedure can be used for other Indic languages as well to solve the problem of tokenization.