Voice assistants are becoming mainstream in the app world, particularly in e-commerce apps. Imagine shopping just by talking to an app. That’s how easy it is going to be.

The search feature in e-commerce apps is far from perfect: spelling and transliteration errors, poor search suggestions, and similar issues can mar the shopping experience, leading to customer dissatisfaction and cart abandonment. Voice-powered search can alleviate these problems, especially when it lets users search naturally in their own language. People unfamiliar (or simply uncomfortable) with complex app navigation can kick back and enjoy their shopping. All in all, voice commerce could be the next big thing to bring over a billion users to e-commerce.

How Voice Is Utilized in E-Commerce Apps

There are many ways e-commerce apps can leverage voice assistants:

- Voice Search: The app performs a search based on the user's spoken instructions and takes the user to the search results page. For example: “Search for shirts.” Voice search can support multiple filters in a single voice input. For example: “Show me blue jeans size 32.” or “Search for shirts with prices less than Rs. 2000.”

- Voice Navigation: Using voice, users can navigate to almost any page. For example: “Show my cart.” or “Go to homepage.”

- Voice Action: Users can perform certain actions using voice commands. For example: “Add item to the cart.”
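To make the three categories concrete, here is a minimal Swift sketch that routes a transcript to a search, navigation, or action intent. The `VoiceIntent` enum, the `classify` function, and the trigger phrases are all hypothetical, and simple prefix matching stands in for the NLP models a real app would use.

```swift
// Hypothetical intent model for the three use cases above.
enum VoiceIntent: Equatable {
    case search(query: String)
    case navigate(destination: String)
    case action(command: String)
    case unknown
}

func classify(_ transcript: String) -> VoiceIntent {
    // Assumed trigger phrases; not exhaustive, purely illustrative.
    let searchPrefixes = ["search for ", "show me "]
    let navigationPrefixes = ["go to ", "show my "]
    let actionPrefixes = ["add ", "remove "]

    // Normalize: lowercase and drop a trailing period from the transcript.
    var text = transcript.lowercased()
    if text.hasSuffix(".") { text.removeLast() }

    for prefix in searchPrefixes where text.hasPrefix(prefix) {
        // Everything after the trigger is the search query.
        return .search(query: String(text.dropFirst(prefix.count)))
    }
    for prefix in navigationPrefixes where text.hasPrefix(prefix) {
        // Everything after the trigger names the destination page.
        return .navigate(destination: String(text.dropFirst(prefix.count)))
    }
    for prefix in actionPrefixes where text.hasPrefix(prefix) {
        // Actions keep the whole phrase so the handler also sees the object.
        return .action(command: text)
    }
    return .unknown
}
```

Prefix matching breaks down quickly with freer phrasing such as “I need some shirts”, which is where a dedicated analysis step becomes necessary.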

Voice Interaction Stages

Interaction in a voice-enabled app happens in three stages:

- Listening Mode

On an iPhone, a red circle expands from the mic button while the user is speaking, indicating that the device is in listening mode. As the user speaks the voice command, the transcribed text appears below the mic button in real time.

- Voice Processing Mode

After five seconds of recording, the voice assistant stops recording and starts processing the transcribed text with the help of Core ML models and natural language processing frameworks.

- Output Mode

The voice assistant performs actions based on the command and speaks out the response.
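The three stages can be modeled as a small state machine. The sketch below is an assumption about how such a flow might be structured, not the demo app's code: `InteractionStage`, `InteractionEvent`, and `next(_:on:limit:)` are hypothetical names, timestamps are plain seconds, and the five-second cutoff is a parameter.

```swift
// Hypothetical stages of one voice interaction.
enum InteractionStage: Equatable {
    case idle
    case listening(startedAt: Double)       // seconds
    case processing(transcript: String)
    case output(response: String)
}

// Hypothetical events that drive the transitions.
enum InteractionEvent {
    case micTapped(at: Double)
    case tick(now: Double, partialTranscript: String)
    case analysisFinished(response: String)
    case responseSpoken
}

func next(_ stage: InteractionStage, on event: InteractionEvent,
          limit: Double = 5) -> InteractionStage {
    switch (stage, event) {
    case (.idle, .micTapped(let time)):
        // Listening mode starts when the mic button is tapped.
        return .listening(startedAt: time)
    case (.listening(let start), .tick(let now, let transcript)) where now - start >= limit:
        // Stop recording once the time limit is reached and hand off the text.
        return .processing(transcript: transcript)
    case (.processing, .analysisFinished(let response)):
        // Processing mode ends when the analyzer has produced a response.
        return .output(response: response)
    case (.output, .responseSpoken):
        return .idle
    default:
        // Any other combination (e.g. a tick before the limit) keeps the stage.
        return stage
    }
}
```

Keeping transitions in one pure function makes the timing rules easy to test without a microphone or a real clock.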

How Voice Search Works in iOS

On iPhone, Siri Shortcuts can be activated by saying a predefined invocation phrase (“Hey Siri, shopping time!”). These phrases are customizable, so users can define ones that are easy to remember. Siri Shortcuts also support validation of user input.

Consider the following example, in which the voice assistant asks for a quantity.

Voice assistant: “Select the quantity.”

User: “Zero”

Voice assistant: “Quantity can’t be lower than one.”
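The quantity check in this dialogue boils down to a small validation function. The following platform-neutral Swift sketch does not use SiriKit or App Intents types; `QuantityResolution`, `resolveQuantity`, and the tiny number-word table are hypothetical and only mirror the exchange above.

```swift
// Hypothetical outcome of validating a spoken quantity.
enum QuantityResolution: Equatable {
    case success(Int)
    case needsValue(prompt: String)
    case unsupported(reason: String)
}

// Spoken number words this sketch understands (deliberately small).
let numberWords: [String: Int] = [
    "zero": 0, "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
]

func resolveQuantity(_ spoken: String) -> QuantityResolution {
    // Keep only letters and digits so "Zero." and " zero " both become "zero".
    let token = spoken.lowercased().filter { $0.isLetter || $0.isNumber }

    // Accept either digits ("3") or a known number word ("three").
    guard let value = Int(token) ?? numberWords[token] else {
        return .needsValue(prompt: "Select the quantity.")
    }
    // Reject quantities below one, as in the dialogue above.
    if value < 1 {
        return .unsupported(reason: "Quantity can't be lower than one.")
    }
    return .success(value)
}
```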

Sample Application

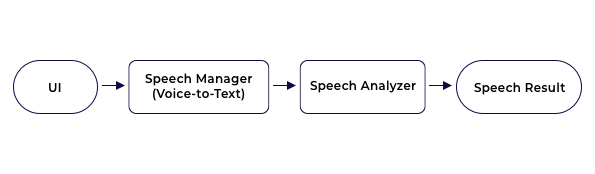

The voice assistant system is implemented separately so it can be integrated with any existing e-commerce application without much effort. The following flowchart shows the basic components of the voice assistant feature in our demo app.

The voice assistant framework mainly consists of three modules:

Speech UI: This is the user interface (UI) part of the voice assistant. Here, we define the conversation structure between the assistant and the user for that particular application. Speech UI analyzes the user input and generates contextual responses.

Speech Manager: This module handles voice-to-text conversion. It records the user's voice for five seconds and transcribes it to text. I've used the SFSpeechRecognizer class from Apple’s Speech framework for this.

Speech Analyzer: This is the most important part of the framework. Its purpose is to analyze the text coming from the Speech Manager module and extract meaning from the user's speech. Some of the frameworks used are Apple’s Natural Language and Create ML, and Google’s Natural Language API.
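Because the modules are meant to plug into an existing app, one way to express their boundaries is with Swift protocols. This is a sketch under assumptions: the protocol names, `AnalysisResult`, `VoiceAssistant`, and the stub types are hypothetical, and the stubs replace SFSpeechRecognizer and real NLP so the wiring can run without a microphone.

```swift
// Hypothetical result passed from the analyzer to the UI layer.
struct AnalysisResult: Equatable {
    let command: String
    let attributes: [String: String]
}

protocol SpeechManaging {
    /// Records up to `duration` seconds of audio and returns the transcript.
    func transcribe(duration: Double) -> String
}

protocol SpeechAnalyzing {
    /// Turns a transcript into a command plus attributes.
    func analyze(_ transcript: String) -> AnalysisResult
}

protocol SpeechUIProviding {
    /// Maps an analysis result to the assistant's next spoken line.
    func response(for result: AnalysisResult) -> String
}

final class VoiceAssistant {
    private let manager: SpeechManaging
    private let analyzer: SpeechAnalyzing
    private let ui: SpeechUIProviding

    init(manager: SpeechManaging, analyzer: SpeechAnalyzing, ui: SpeechUIProviding) {
        self.manager = manager
        self.analyzer = analyzer
        self.ui = ui
    }

    /// One full turn: listen for five seconds, analyze, then respond.
    func handleTurn() -> String {
        let transcript = manager.transcribe(duration: 5)
        let result = analyzer.analyze(transcript)
        return ui.response(for: result)
    }
}

// Stub: returns a fixed transcript instead of recording audio.
struct FixedTranscriptManager: SpeechManaging {
    let transcript: String
    func transcribe(duration: Double) -> String { transcript }
}

// Stub: treats the first word as the command and the rest as free text.
struct FirstWordAnalyzer: SpeechAnalyzing {
    func analyze(_ transcript: String) -> AnalysisResult {
        let words = transcript.lowercased().split(separator: " ")
        let command = words.first.map { String($0) } ?? ""
        let rest = words.dropFirst().joined(separator: " ")
        return AnalysisResult(command: command, attributes: ["text": rest])
    }
}

// Stub: echoes the recognized command back to the user.
struct EchoUI: SpeechUIProviding {
    func response(for result: AnalysisResult) -> String {
        "You asked to \(result.command)."
    }
}
```

Swapping a stub for a real implementation (for example, one backed by SFSpeechRecognizer) would not require touching `VoiceAssistant`.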

The result generated by the Speech Analyzer contains the command and various attributes identified from the spoken phrase.

For example: “Search for shirts under 2000.”

The Speech Analyzer will convert the spoken phrase into a format the machine can understand:

{
  command: search
  attributes: ["name": "shirts", "filterBy": "under", "filterPrice": 2000]
}
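A rule-based approximation of this conversion fits in a few lines. The sketch below swaps in plain token matching for the Natural Language, Create ML, and Google models mentioned earlier; `AnalyzerResult` and `parseSearch` are hypothetical, and only phrases of the form “search for <name> [under/below/above/over <price>]” are recognized.

```swift
// Hypothetical typed version of the { command, attributes } structure.
struct AnalyzerResult: Equatable {
    let command: String
    let name: String
    let filterBy: String?
    let filterPrice: Int?
}

/// Rule-based stand-in for the Speech Analyzer's search parsing.
func parseSearch(_ phrase: String) -> AnalyzerResult? {
    // Lowercase and keep only letters, digits, and spaces.
    let cleaned = phrase.lowercased().filter { $0.isLetter || $0.isNumber || $0 == " " }
    var words = cleaned.split(separator: " ").map { String($0) }

    // The phrase must start with the "search for" trigger and name something.
    guard words.count >= 3, words[0] == "search", words[1] == "for" else { return nil }
    words.removeFirst(2)

    // Detect a trailing price filter such as "under 2000".
    let filterWords: Set<String> = ["under", "below", "above", "over"]
    var filterBy: String? = nil
    var filterPrice: Int? = nil
    if words.count >= 2,
       filterWords.contains(words[words.count - 2]),
       let price = Int(words[words.count - 1]) {
        filterBy = words[words.count - 2]
        filterPrice = price
        words.removeLast(2)
    }

    // Whatever remains is the product name.
    guard !words.isEmpty else { return nil }
    return AnalyzerResult(command: "search", name: words.joined(separator: " "),
                          filterBy: filterBy, filterPrice: filterPrice)
}
```

Typed fields such as `filterPrice: Int?` let the compiler catch a missing or misspelled attribute, which a loosely typed dictionary would not.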

The Speech Analyzer module is also responsible for generating appropriate voice responses as defined in Speech UI.
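Response generation can be sketched as filling templates that Speech UI supplies per command. The template keys, placeholders such as `{name}`, and the `spokenResponse` function are hypothetical; in a real app the resulting string would then be passed to a text-to-speech engine.

```swift
import Foundation

// Hypothetical templates standing in for what Speech UI defines per command.
let responseTemplates: [String: String] = [
    "search": "Here are {name}",
    "searchWithPrice": "Here are {name} {filter} Rs. {price}",
    "navigate": "Opening {name}",
]

func spokenResponse(command: String, name: String,
                    filterBy: String? = nil, price: Int? = nil) -> String {
    // Use the price-aware template only when a complete price filter was parsed.
    let hasPriceFilter = filterBy != nil && price != nil
    let key = (command == "search" && hasPriceFilter) ? "searchWithPrice" : command
    guard let template = responseTemplates[key] else {
        return "Sorry, I didn't understand that."
    }
    // Fill in the placeholders the analyzer recognized.
    var text = template.replacingOccurrences(of: "{name}", with: name)
    if let filterBy = filterBy, let price = price {
        text = text.replacingOccurrences(of: "{filter}", with: filterBy)
        text = text.replacingOccurrences(of: "{price}", with: String(price))
    }
    return text + "."
}
```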

Limitations of Current Voice Assistants

- Language Support

Support for many regional languages is still absent. Even when a language is supported, speech recognition hasn’t advanced enough to understand individual accents and intonations.

- Restricted Engagement

When it comes to products like apparel and other fashion accessories, visuals matter a lot. Product pictures and videos influence users' purchases. With voice-only interaction, this crucial influence is lost.

- Privacy and Security Concerns

Privacy is a major concern when using voice commands, especially when it comes to making payments online.

- Specific Queries for Specific Products

Shopping with a voice-enabled device means your customers cannot scroll through your products before making a purchase, which makes upselling and cross-selling a challenge.

The Way Forward for Voice Commerce

Flipkart and Amazon are among the retail giants that have rolled out voice commerce in their apps. While Amazon supports voice search and navigation, it does not yet support regional languages. In India, JioMart is another e-commerce venture that has integrated a voice assistant into its app. Voice-enabled apps have a long way to go in terms of supporting diverse languages and making interactions more human. But I bet voice commerce will become far more sophisticated in the coming years as the underlying technologies mature.