You’ve created your application in Kubernetes. You’ve got the pods up and running. And you're ready to unveil your brilliant application. But how do your end users connect?

The simplest way would be to create a Service of type LoadBalancer for each application that needs to be exposed externally.

Another option would be to set up a NodePort service and then put a load balancer in front of it. On the load balancer, you would manually create a routing rule to forward all your traffic to the NodePort.

But what if you have more than one endpoint to expose? If you went the first route, you would end up paying for a new load balancer for every service. If you took the second, you would have to maintain a growing set of routing rules by hand.

Or, you could create an ingress controller.

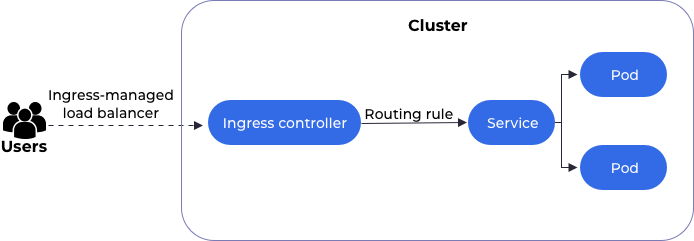

An ingress controller routes traffic from an external source to the pods running inside a Kubernetes cluster, based on rules such as the requested domain or URL path. It abstracts away the complexity of application routing.

Components of a K8s Ingress Controller

An ingress controller has two basic components.

1. Kubernetes Ingress Resource

This is a native Kubernetes resource. It’s where you map your external DNS names to internal Kubernetes services. You can also add more complex routing rules here, for example sending traffic for /payment to one service and traffic for /api to another.
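To make the idea of path-based rules concrete, here is a minimal Python model of how a request could be matched against host and path rules. It assumes Kubernetes "Prefix" path semantics (matching whole path elements separated by "/", with the longest matching prefix winning). The hostname `shop.example.com` and the service names are hypothetical, and this is a sketch of the matching logic, not any controller's actual code.

```python
from typing import Dict, List, Optional, Tuple

# host -> list of (path prefix, backend service name); all names are hypothetical
Rules = Dict[str, List[Tuple[str, str]]]

RULES: Rules = {
    "shop.example.com": [
        ("/payment", "payment-svc"),
        ("/api", "api-svc"),
        ("/", "frontend-svc"),
    ],
}


def _elements(path: str) -> List[str]:
    # "/api/v1/" -> ["api", "v1"]; empty parts from repeated or trailing slashes are dropped
    return [part for part in path.split("/") if part]


def prefix_matches(prefix: str, path: str) -> bool:
    """True if `prefix` matches the start of `path` element by element ("Prefix" semantics)."""
    p, q = _elements(prefix), _elements(path)
    return q[: len(p)] == p


def route(rules: Rules, host: str, path: str) -> Optional[str]:
    """Return the backend service for a request, or None if no rule matches."""
    candidates = [
        (len(_elements(prefix)), service)
        for prefix, service in rules.get(host, [])
        if prefix_matches(prefix, path)
    ]
    if not candidates:
        return None
    # The longest (most specific) matching prefix wins.
    return max(candidates, key=lambda c: c[0])[1]


if __name__ == "__main__":
    print(route(RULES, "shop.example.com", "/payment/checkout"))  # payment-svc
    print(route(RULES, "shop.example.com", "/paymentx"))  # frontend-svc (not a whole-element match)
```

Matching on path elements rather than raw string prefixes is what keeps `/paymentx` from being routed to the payment service.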

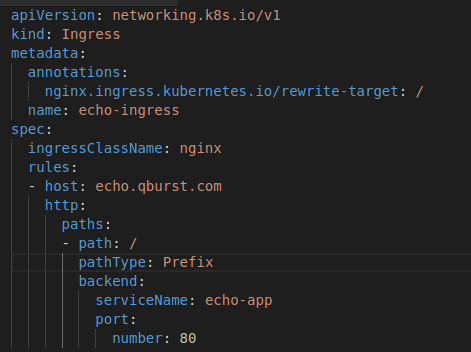

For example, an ingress definition can map all requests for echo.qburst.com to my service named echo-app.
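As a sketch of what such a definition could look like, the Python below builds a `networking.k8s.io/v1` Ingress as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The Ingress name `echo-ingress` and the service port 80 are assumptions for illustration, not values from the original setup.

```python
import json


def echo_ingress(host: str = "echo.qburst.com", service: str = "echo-app", port: int = 80) -> dict:
    """Build a networking.k8s.io/v1 Ingress that sends every path on `host` to `service`.

    Assumptions: the name "echo-ingress" and port 80 are illustrative placeholders.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "echo-ingress"},
        "spec": {
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                # "/" with pathType Prefix matches every request path
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {"name": service, "port": {"number": port}}
                                },
                            }
                        ]
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    # e.g. `python echo_ingress.py | kubectl apply -f -`
    print(json.dumps(echo_ingress(), indent=2))
```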

In this way, an ingress lets you define your application routing in a simple YAML file. This file will look more or less the same whichever controller you end up choosing.

2. Kubernetes Ingress Controller

This is NOT a native Kubernetes resource: Kubernetes does not ship with one, so you must deploy a controller yourself and expose it, typically with a LoadBalancer in front of it. The controller watches the ingress resources for the latest rules and generates its routing configuration from them. All external traffic directed to the load balancer goes to the ingress controller, which then forwards it to the correct service based on that configuration.
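The "generates a configuration" step can be sketched in a few lines. The Python below turns a list of v1 Ingress objects into a simplified NGINX-style config. It is an illustration under loose assumptions: the input is a hard-coded list rather than a live watch on the Kubernetes API, and real controllers generate far richer configuration and often proxy to pod endpoints directly rather than to the Service DNS name used here.

```python
from typing import List


def render_config(ingresses: List[dict]) -> str:
    """Render a simplified NGINX-style config from networking.k8s.io/v1 Ingress objects."""
    blocks = []
    for ing in ingresses:
        namespace = ing.get("metadata", {}).get("namespace", "default")
        for rule in ing["spec"].get("rules", []):
            # A rule without a host applies to all hosts; "_" is NGINX's usual catch-all name.
            lines = ["server {", f"    server_name {rule.get('host', '_')};"]
            for p in rule["http"]["paths"]:
                svc = p["backend"]["service"]
                upstream = f"{svc['name']}.{namespace}.svc.cluster.local:{svc['port']['number']}"
                lines += [
                    f"    location {p['path']} {{",
                    f"        proxy_pass http://{upstream};",
                    "    }",
                ]
            lines.append("}")
            blocks.append("\n".join(lines))
    return "\n\n".join(blocks)


if __name__ == "__main__":
    demo = {
        "metadata": {"name": "echo-ingress"},
        "spec": {"rules": [{"host": "echo.qburst.com", "http": {"paths": [
            {"path": "/", "pathType": "Prefix",
             "backend": {"service": {"name": "echo-app", "port": {"number": 80}}}}]}}]},
    }
    print(render_config([demo]))
```

Whenever an Ingress changes, a controller following this pattern would regenerate the config and reload its proxy, which is why every new rule adds to the controller's workload.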

Best Practices for Designing a K8s Ingress Controller

It is pretty easy to get started with an ingress controller by following a tutorial. That can be a problem, because you may not give it much thought until something breaks. Many factors go into the design of a successful system, and each decision has to be carefully weighed.

Here are some of the practices I follow while designing an ingress controller.

- Choose the controller based on your requirements

There are a lot of controllers out there (including two different NGINX-based ones!), so it’s important to understand which features you need from the controller and choose accordingly. Some of the important factors you should consider are:

- Traffic protocol - What kind of traffic are you routing? HTTP(S)? Do you need WebSockets or HTTP/2? TCP/UDP?

- Traffic control - Do you need any specific control over your traffic? Rate limiting? Retries? Circuit breakers to keep errors from cascading across services?

- Traffic splitting - Do you need advanced traffic rules, like supporting canary deployments or A/B testing?

- Monitoring - Do you need to integrate with existing log centralization and metrics collection systems?

- Vendor lock-in - Do you anticipate moving between cloud providers (say, from AWS to GCP) down the line? Some controllers are tied to a specific cloud.

- Extensibility - If you don't know most of the requirements in advance, you should select a more flexible ingress controller that allows you to add custom plugins later.
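Traffic splitting for canary releases is easier to judge once you see the basic mechanism. The Python below is a sketch of weighted splitting, assuming you want each user pinned to one version, so it hashes a user ID into one of 100 buckets instead of picking randomly per request. The backend names `app-v1` and `app-v2` are hypothetical, and this is not how any particular controller implements canaries.

```python
import hashlib


def pick_backend(user_id: str, canary_weight: int,
                 stable: str = "app-v1", canary: str = "app-v2") -> str:
    """Send roughly `canary_weight` percent of users to the canary backend."""
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    # sha256 is stable across processes, unlike Python's built-in hash() for strings.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return canary if bucket < canary_weight else stable


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1000)]
    share = sum(pick_backend(u, 20) == "app-v2" for u in users) / len(users)
    print(f"canary share at weight 20: {share:.2%}")
```

If your release process depends on this kind of rule, check that the controller you pick supports weighted or header/cookie-based splitting natively.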

- Set up a separate ingress controller for tools (preferably internal)

In addition to your applications, you will have a host of tools to help maintain your cluster, like monitoring (Prometheus), log centralization (EFK), and deployment (Argo CD). You might also have your own internal admin tools. Ideally, you should place all such tools behind a separate ingress controller (if possible, on an internal-only load balancer) and limit the IPs that can access it. This improves security and also keeps traffic to these tools from interfering with your main applications’ traffic.
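Limiting access by IP usually comes down to checking the client address against a list of allowed CIDR ranges; most controllers expose this as a configuration option. The Python below sketches that check with the standard `ipaddress` module. The ranges in `TOOLS_ALLOWLIST` are hypothetical stand-ins for an internal network and an office VPN.

```python
import ipaddress
from typing import Iterable

# Hypothetical ranges: an internal VPC and an office VPN egress range.
TOOLS_ALLOWLIST = ["10.0.0.0/8", "203.0.113.0/24"]


def is_allowed(client_ip: str, allowed_cidrs: Iterable[str]) -> bool:
    """Return True if client_ip falls inside any of the allowed CIDR ranges."""
    ip = ipaddress.ip_address(client_ip)
    # Membership is False (not an error) when IPv4/IPv6 versions differ.
    return any(ip in ipaddress.ip_network(cidr) for cidr in allowed_cidrs)


if __name__ == "__main__":
    for addr in ["10.1.2.3", "203.0.113.7", "198.51.100.1"]:
        print(addr, is_allowed(addr, TOOLS_ALLOWLIST))
```

One caveat: the controller only sees the real client IP if the load balancer preserves it (for example with `externalTrafficPolicy: Local` on the controller's Service); otherwise every request appears to come from the load balancer.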

- Manage SSL for ingress endpoints

You should enable SSL/TLS on your ingress controller’s load balancer so that data in transit is encrypted. By default, TLS is terminated at the ingress, so traffic from the controller to the pod travels unencrypted. If you want end-to-end encryption, you need to configure your controller to use TLS toward the backend and make the relevant changes to your application so that it serves TLS itself.
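In a v1 Ingress, TLS termination is declared in the `spec.tls` section, which lists the hosts to secure and a Secret holding the certificate. The Python below is a small sketch that adds such a section to an existing Ingress dictionary. The Secret name `echo-tls` is hypothetical; it would need to be a `kubernetes.io/tls` Secret (with `tls.crt` and `tls.key`) in the same namespace as the Ingress.

```python
import copy


def with_tls(ingress: dict, secret_name: str) -> dict:
    """Return a copy of a networking.k8s.io/v1 Ingress with TLS enabled for all its hosts."""
    result = copy.deepcopy(ingress)  # leave the caller's object untouched
    hosts = [r["host"] for r in result["spec"].get("rules", []) if "host" in r]
    result["spec"]["tls"] = [{"hosts": hosts, "secretName": secret_name}]
    return result


if __name__ == "__main__":
    ing = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "echo-ingress"},
        "spec": {"rules": [{"host": "echo.qburst.com", "http": {"paths": []}}]},
    }
    print(with_tls(ing, "echo-tls")["spec"]["tls"])
```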

- Add separate controllers for different applications

As the number of applications increases, you could run into network bottlenecks if everything is routed through a single controller. When you add more ingress rules, you are adding more configuration to the controller, which can also degrade performance in some controllers, depending on the number and type of rules. Ideally, I group the applications based on their purpose or owning organization and use a separate controller for each group.
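In a v1 Ingress, the field that ties an Ingress to a specific controller is `spec.ingressClassName`. The Python below sketches one way to enforce a grouping: each group maps to the IngressClass watched by its own controller. The group names and class names in `GROUP_TO_CLASS` are hypothetical.

```python
from typing import Dict

# Hypothetical grouping: each group is served by its own ingress controller,
# identified by the IngressClass name that controller watches.
GROUP_TO_CLASS: Dict[str, str] = {
    "payments": "nginx-payments",
    "storefront": "nginx-storefront",
    "internal-tools": "nginx-internal",
}


def assign_ingress_class(ingress: dict, group: str) -> dict:
    """Return a copy of `ingress` bound to the controller that serves `group`."""
    if group not in GROUP_TO_CLASS:
        raise KeyError(f"no ingress controller configured for group {group!r}")
    result = dict(ingress)
    # Build a new spec dict so the caller's original spec is not modified.
    result["spec"] = {**ingress.get("spec", {}), "ingressClassName": GROUP_TO_CLASS[group]}
    return result


if __name__ == "__main__":
    checkout = {"kind": "Ingress", "metadata": {"name": "checkout"}, "spec": {"rules": []}}
    print(assign_ingress_class(checkout, "payments")["spec"])
```

Failing loudly on an unknown group keeps an Ingress from silently landing on a default controller.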

- Enable HPA for the ingress controller

With a Horizontal Pod Autoscaler (HPA), the number of pods automatically scales out and in based on a metric (usually CPU utilization). While it's a best practice to enable HPA on your applications, many people forget to set it up on their ingress controller. Your ingress controller is the first point of entry and needs capacity planning as much as, or even more than, all the other applications.
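The core scaling rule the HPA applies is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default) of 1.0. The Python below is a simplified sketch of that rule, useful for estimating how many controller pods a traffic spike would trigger. It ignores details such as stabilization windows and pod readiness, and the `min_replicas`/`max_replicas` defaults are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 2, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current * currentMetric / targetMetric), clamped to bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 controller pods at 90% CPU against a 60% target scale out to 5.
    print(desired_replicas(3, 90, 60))
```

Keeping `min_replicas` at 2 or more means the entry point survives the loss of a single pod even when traffic is low.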

- Integrate a WAF

Security is unfortunately an afterthought in most setups. But the minute you start getting DDoS’d, the next question is “How do I protect myself now?” You should plan to integrate a Web Application Firewall (WAF) sooner rather than later. Which WAF you should use depends entirely on your setup. It could be Cloudflare, ModSecurity with NGINX, or your cloud provider’s WAF.