Migrating legacy infrastructure to the cloud is no easy feat.

However challenging the process, migration makes a lot of business sense, given the unmatched efficiency and scalability of the cloud.

This is the cloud adoption success story of one of our clients. The journey is explained in three parts, starting with this post, where we provide an overview of the challenges involved and the strategies adopted.

Let's dive in.

Why Azure?

Our client's infrastructure, spread across various self-owned data centers, struggled to keep up with the growing demands of the business. The strategic decision to migrate all the services to the cloud was taken to improve business agility and ensure resource allocation flexibility. Azure was the obvious choice since the enterprise relied heavily on Microsoft product suites. Our role as a cloud migration service provider was to support the entire migration journey, from initial assessment and planning to the final transition.

Discovery Phase

Any cloud migration has its own set of challenges. In our case, the most formidable one was that we had to complete the migration within a six-month window!

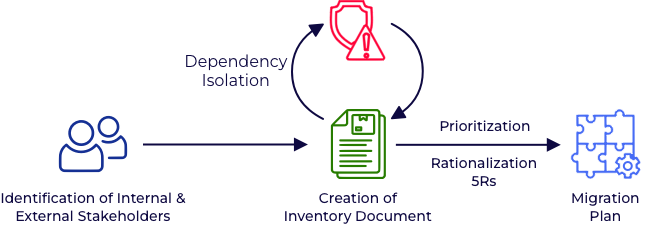

We began with a comprehensive assessment of the client’s current ecosystem. The diversity of services, each with its unique requirements, dependencies, and integration points, made our inventory assessment a daunting task. The lack of documentation added to our challenge.

We realized early on the importance of planning and documenting every step!

We identified hundreds of components, including business applications, databases, file shares, and virtual desktops. There were also servers that hosted domain controllers, a certificate authority, BI and finance apps, and monitoring and security tools.

Next, we identified the key stakeholders in the company and mapped the services/components managed by them. For each service, we documented its host server, associated databases, file shares, and dependencies. We also organized extensive discovery sessions with the vendors engaged by the client for networking and backup services for a clear understanding of the existing IT stack.
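
As an illustration of how such an inventory can be put to work, here is a minimal Python sketch. It is not the tooling used in this engagement: the `ServiceRecord` fields, the service names, and the server names are all hypothetical. It records each service with its owner, host server, databases, file shares, and dependencies, then derives an order in which every service comes after the services it depends on.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class ServiceRecord:
    """One row of the discovery inventory (all field names are illustrative)."""
    name: str
    owner: str          # stakeholder who manages the service
    host_server: str
    databases: list[str] = field(default_factory=list)
    file_shares: list[str] = field(default_factory=list)
    depends_on: list[str] = field(default_factory=list)  # names of other services


def migration_order(inventory: list[ServiceRecord]) -> list[str]:
    """Return service names so that each service follows its dependencies."""
    known = {record.name for record in inventory}
    sorter = TopologicalSorter()
    for record in inventory:
        missing = [dep for dep in record.depends_on if dep not in known]
        if missing:
            # An undocumented dependency is a gap in the discovery data.
            raise ValueError(f"{record.name} depends on undocumented services: {missing}")
        sorter.add(record.name, *record.depends_on)
    # static_order() raises graphlib.CycleError on circular dependencies.
    return list(sorter.static_order())


# Hypothetical sample data.
inventory = [
    ServiceRecord("claims-portal", "Claims team", "app-srv-01",
                  databases=["claims-db"], depends_on=["auth-service"]),
    ServiceRecord("auth-service", "Platform team", "app-srv-02"),
    ServiceRecord("finance-reports", "Finance team", "bi-srv-01",
                  depends_on=["claims-portal"]),
]

print(migration_order(inventory))
```

Raising an error on an unknown dependency is a deliberate choice in this sketch: with sparse documentation, a missing entry is more likely a discovery gap than a real absence.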

Challenges for Migration

The client already had a Microsoft Entra ID tenant with a few unorganized subscriptions, but this setup was inadequate for the migration requirements.

Another hurdle was that the organization made limited use of Infrastructure as Code (IaC), so there was no well-defined IaC and automation framework. Building one added another task to an already demanding timeline.

The Domain Name System (DNS) was spread across various platforms, including public domains, private domains hosted on-premise, and hybrid domains that were managed both on-premise and publicly. We needed a well-defined solution to handle the DNS and certificates for the in-house applications hosted publicly and privately.

The large volume of data to be migrated was also a concern. Because the client is a major insurer, business continuity was critical. The challenge for us was to define a migration strategy for the databases with minimal downtime and zero data loss.

Key Migration Strategies

- Infrastructure as Code: Adoption of Infrastructure as Code (IaC) to achieve faster deployment and better accountability was a key vision of the client. We chose Terraform as our IaC tool for its features, including the ready availability of a Microsoft-maintained module for landing zone deployment. To streamline deployment, we decided to develop a GitOps workflow, leveraging Terraform's capabilities.

- CAF-Azure Landing Zone: The Azure Landing Zone would help us to better organize Azure subscriptions and manage the accounts with much more control.

- Phased Migration: We opted for a phased approach to minimize the impact on business operations.

  - Phase 1 - Create an IaC framework and deploy the Azure Landing Zone.

  - Phase 2 - Containerize applications and migrate databases (MySQL and MSSQL).

  - Phase 3 - Migrate on-premise virtual desktops.

- Compliance and Security: To uphold the compliance and security best practices defined by the organization, we decided to enforce them through Azure policies.

- High availability and DR: Ensuring high availability of all critical resources was a top priority. We also drew up a detailed disaster recovery plan for the resources.

Infrastructure as Code and GitOps Workflow

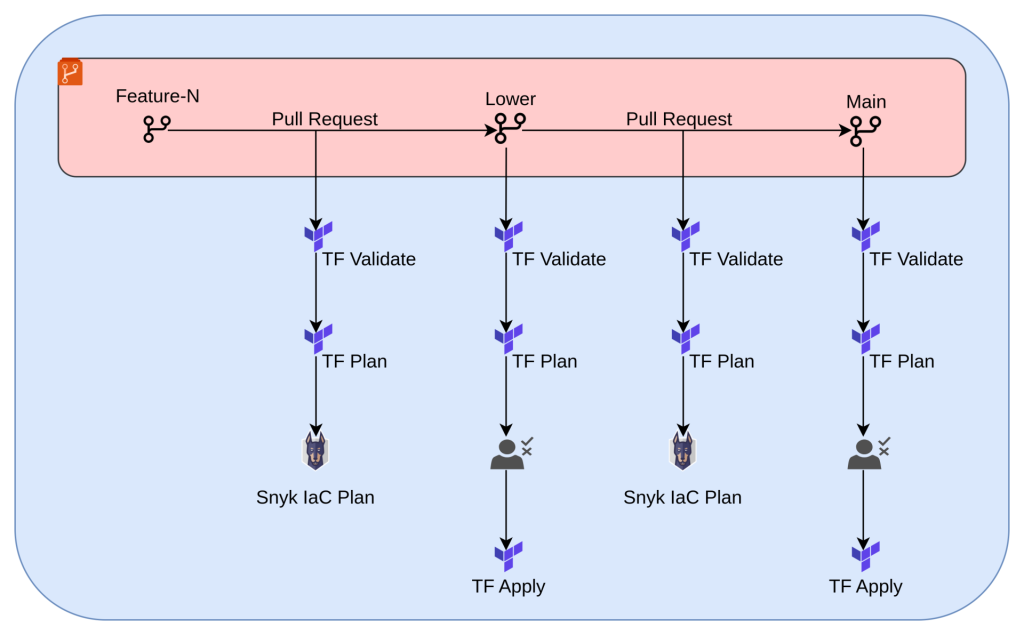

The challenge here was to design a GitOps workflow that met the organization's compliance and security regulations. Using Azure DevOps and Terraform, we developed a streamlined workflow (Figure 2). This workflow includes a branching strategy and deployment pipelines with approval gates to control the deployments. We also set up Pull Request (PR)-based pipelines to check infrastructure changes before applying them.

Figure 2: GitOps workflow

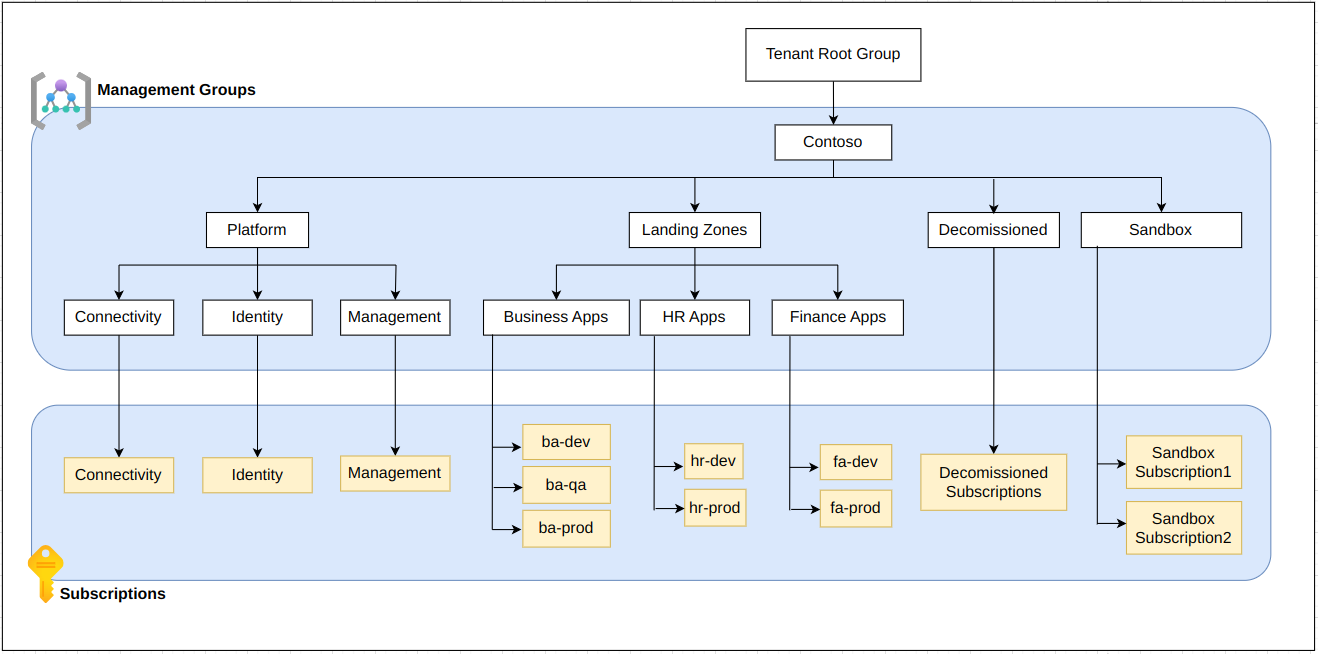
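
To make the idea of a PR-based check concrete, here is a minimal Python sketch of one possible gate. It is not the pipeline built for this client. It assumes the PR pipeline has already exported the plan as JSON (for example with `terraform show -json` on a saved plan file) and relies on the `resource_changes` and `change.actions` fields of Terraform's JSON plan representation. The function name and the sample resource addresses are hypothetical.

```python
import json


def destructive_changes(plan_json: str) -> list[str]:
    """Return addresses of resources the plan would delete or replace."""
    plan = json.loads(plan_json)
    flagged = []
    for change in plan.get("resource_changes", []):
        actions = change.get("change", {}).get("actions", [])
        # "delete" appears both in plain deletes and in replacements
        # (delete followed by create, or create followed by delete).
        if "delete" in actions:
            flagged.append(change["address"])
    return flagged


# Hypothetical, heavily trimmed plan output for illustration only.
sample_plan = json.dumps({
    "resource_changes": [
        {"address": "azurerm_resource_group.app",
         "change": {"actions": ["create"]}},
        {"address": "azurerm_virtual_network.spoke",
         "change": {"actions": ["delete", "create"]}},
        {"address": "azurerm_storage_account.logs",
         "change": {"actions": ["no-op"]}},
    ]
})

flagged = destructive_changes(sample_plan)
if flagged:
    print("Needs explicit approval:", ", ".join(flagged))
else:
    print("No destructive changes in this plan.")
```

A check like this could fail the PR build or simply annotate the pull request, leaving the final decision to the reviewers behind the approval gate.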

Landing Zone Architecture

Based on our analysis of the organizational structure, we designed a management group layout for the Landing Zone following the Microsoft Cloud Adoption Framework (CAF). To implement this architecture, we sourced the Microsoft-recommended “caf-enterprise-scale” Terraform module. This code was customized to meet all client-specific requirements and industry guidelines.

After the discovery phase, we already had a clear understanding of how to organize the subscriptions. Now, we had to come up with a fine-grained architecture for centralized connectivity, security, and management.

Connectivity Design

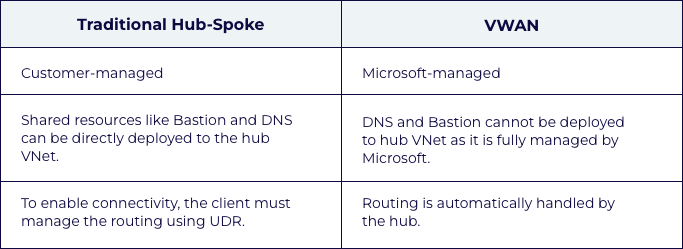

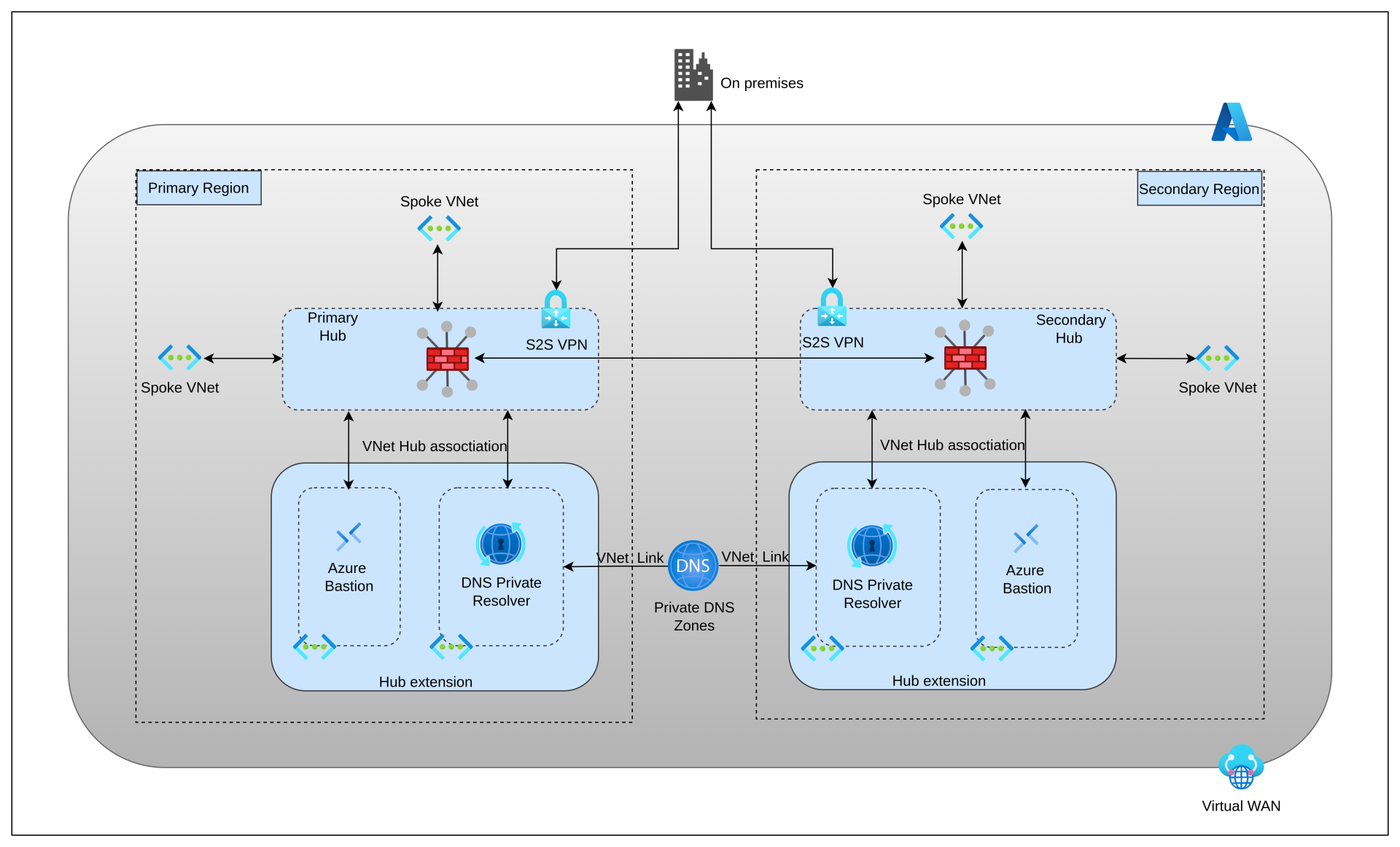

The Connectivity subscription provides connectivity between Azure networks and on-premises networks. The CAF module offers two connectivity options when deploying the Azure landing zone: a traditional hub-and-spoke model and Virtual WAN (VWAN). We chose the VWAN model because it is fully managed by Microsoft and eliminates the overhead of managing static routes.

Shared Resources

In a traditional hub-and-spoke topology, services such as DNS resources, customized network virtual appliances (NVAs), and Azure Bastion are shared across spoke workloads to keep resource costs down. Because we had opted for Virtual WAN, we faced limitations on deploying these shared resources into the hub network. We addressed this by implementing the Virtual Hub Extension pattern. Separate VNets were established for each shared resource in the primary and secondary regions and connected to the respective hubs.

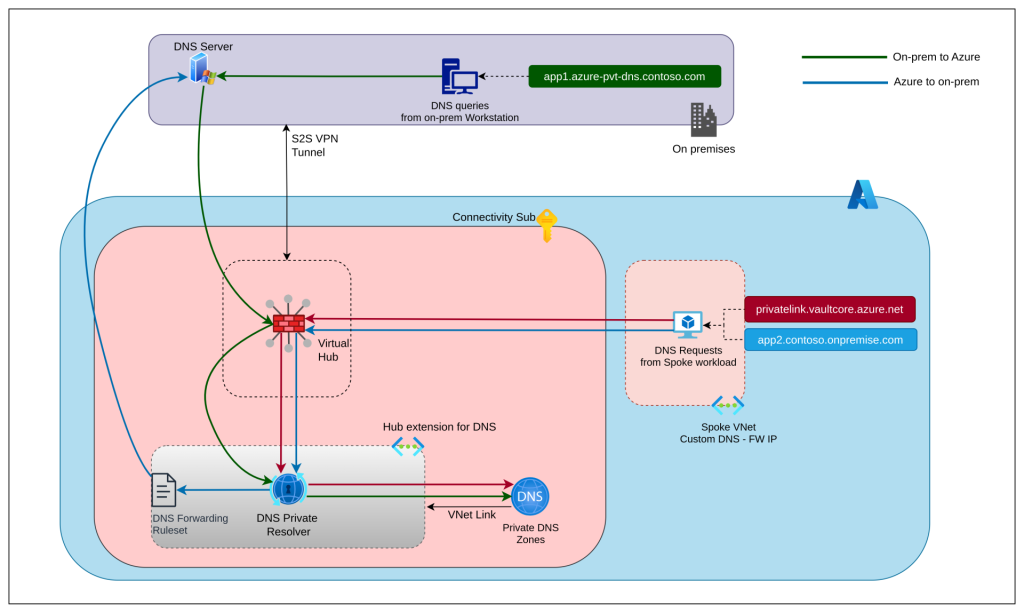

DNS Flow

To resolve DNS queries across on-premise domains and Azure Private DNS Zones, we designed a DNS flow. All VNets first forward their DNS queries to the Azure Firewall (acting as a DNS proxy) associated with the virtual hub. A DNS Private Resolver deployed as part of the hub extension handles the DNS flow across the entire intranet. Every Private DNS Zone is linked to the DNS Private Resolver’s VNet. This includes custom Private DNS Zones as well as the default zones created for Private Endpoints.

For Azure to on-premise DNS resolution, we created a DNS forwarding ruleset and associated it with the DNS Private Resolver. This directs DNS queries for on-premise domains to the DNS server hosted on the Domain Controller.

For on-premise to Azure DNS resolution, we created conditional forwarders on the existing on-premise DNS server. They direct queries for Azure Private domains to the DNS Private Resolver.
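
The forwarding decision in the Azure to on-premise direction can be pictured with a short Python sketch. This is only a model of the logic, not Azure's implementation or the client's configuration: the domain names and IP addresses are hypothetical, and the sketch assumes that when several rules match, the most specific (longest) suffix wins.

```python
from typing import Optional

# Hypothetical forwarding rules: domain suffix -> target DNS server IPs.
FORWARDING_RULES = {
    "corp.example.com.": ["10.10.0.4"],         # on-premise domain, DNS on the Domain Controller
    "legacy.corp.example.com.": ["10.20.0.4"],  # more specific rule for a sub-domain
}


def normalize(name: str) -> str:
    """Lower-case the name and make sure it ends with a trailing dot."""
    name = name.lower()
    return name if name.endswith(".") else name + "."


def match_rule(query: str, rules: dict[str, list[str]]) -> Optional[str]:
    """Return the longest rule suffix that matches the query, or None."""
    fqdn = normalize(query)
    matches = [
        suffix for suffix in rules
        # Match on whole labels only, so "notcorp.example.com" does not match.
        if fqdn == suffix or fqdn.endswith("." + suffix)
    ]
    return max(matches, key=len, default=None)


def resolve_target(query: str, rules: dict[str, list[str]] = FORWARDING_RULES) -> str:
    """Describe where a query from an Azure VNet would be sent in this model."""
    suffix = match_rule(query, rules)
    if suffix is None:
        return "answer from Private DNS Zones linked to the resolver VNet or Azure DNS"
    return "forward to " + ", ".join(rules[suffix])


for name in ("app.corp.example.com", "db.legacy.corp.example.com",
             "mystore.privatelink.blob.core.windows.net"):
    print(name, "->", resolve_target(name))
```

The conditional forwarders on the on-premise DNS server play the mirror-image role for queries travelling in the other direction.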

Identity and Access Management

We set up Domain Controllers within the identity subscription using Windows virtual machines to extend the on-premise Active Directory service to Azure. This enabled the synchronization of user and computer accounts between on-premises and the cloud. Additionally, we ensured the high availability of the Domain Controllers by deploying the VMs in the primary and secondary regions across multiple availability zones.

Management Design

To ensure compliance and effective monitoring, we created a central Log Analytics workspace within the Management subscription. This served as a platform for gathering metrics and logs from diverse Azure resources across subscriptions.

Leveraging Azure policies from CAF, we configured diagnostic settings on every Azure resource to send logs and metrics to the central Log Analytics workspace. Data collection rules were implemented on a few resources to collect additional logs and metrics. We also forwarded all these logs to the client’s centralized Security Information and Event Management (SIEM) platform using Log Analytics workspace data export rules and Azure Event Hubs.

Azure Policies

Azure Policies help enforce and assess compliance at scale. We tailored the Microsoft-recommended Azure policies in the CAF Terraform module to the client's requirements after a careful review. We then deployed these policies using the CAF IaC pipeline.

Custom IaC Pipelines

We designed additional custom pipelines to centrally manage critical tasks like onboarding spoke VNets and managing Firewall Policies and Private DNS Zone records.

- Virtual Network IaC pipeline: Creates new VNets and associates them with virtual hubs based on stakeholder requests.

- Firewall Policy IaC Pipeline: While the CAF module facilitates the deployment of primary and secondary firewall policies, ongoing policy management can be cumbersome. We introduced this pipeline to streamline the process.

- DNS IaC Pipeline: Manages DNS records in the custom Private DNS Zones created using CAF.
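
A pipeline that onboards spoke VNets on request benefits from a few automated pre-checks. As one example, here is a minimal Python sketch of an address-space overlap check that could run before any Terraform change is planned. Whether the actual pipeline included such a check is an assumption on our part for illustration; the function name, VNet names, and CIDR ranges are hypothetical.

```python
import ipaddress


def find_overlaps(requested_cidr: str, existing_vnets: dict[str, str]) -> list[str]:
    """Return names of existing VNets whose address space overlaps the request."""
    # ip_network() raises ValueError for malformed CIDRs or set host bits.
    requested = ipaddress.ip_network(requested_cidr)
    return [
        name for name, cidr in existing_vnets.items()
        if requested.overlaps(ipaddress.ip_network(cidr))
    ]


# Hypothetical address plan for VNets already connected to the virtual hub.
existing_vnets = {
    "hub-ext-dns": "10.100.0.0/24",
    "spoke-claims": "10.101.0.0/22",
}

for request in ("10.101.2.0/24", "10.102.0.0/24"):
    clashes = find_overlaps(request, existing_vnets)
    if clashes:
        print(f"{request}: rejected, overlaps with {', '.join(clashes)}")
    else:
        print(f"{request}: address space is free")
```

Catching an overlap at request time is cheaper than discovering it after the VNet has been connected to a hub and routes have been propagated.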

Check out Part 2, where we delve deeper into the migration’s execution phase.