Imagine a chatbot that can not only identify issues faced by tenants but also provide step-by-step instructions to resolve them. This isn't just a possibility; it's a reality.

Whether it’s resolving issues with home appliances, providing maintenance support, or answering general queries, our property management chatbot is equipped to handle the task.

To achieve this level of functionality, a chatbot must go beyond simple response mechanisms and handle unpredictable user interactions effectively: it should understand user intent, adapt to different conversational styles, and provide relevant responses.

Traditional rule-based and LLM-based chatbots have some inherent limitations that make them less effective in this regard.

Rule-Based Chatbots: Chatbots built with frameworks such as RASA rely on predefined intents and entities to provide responses. They struggle with the nuances of human language: if a user phrases a problem in an unexpected way, the bot may not recognize it and, worse, may respond inaccurately. Moreover, rule-based chatbots require extensive manual configuration and continuous updates to handle the many ways users express the same problem.

LLM-Based Chatbots: Chatbots built using LLMs like OpenAI's GPT can generate more context-aware responses. However, they are not always accurate, especially on highly specialized topics. In our context (property management), where technical details and product-specific knowledge are crucial, relying solely on an LLM is risky.

Our Hybrid Approach to Chatbot Development

Our property management chatbot combines the rule-based structure of RASA with the flexibility of an LLM, broadening the range of queries it can handle. RASA's structured approach enables precise intent recognition and clear conversational flows, while the LLM enables the chatbot to understand the many ways people describe their problems.

Consider three ways a tenant might report the same sink problem:

"Hey, my kitchen sink’s leaking. Can someone come fix it?"

"There’s an annoying drip from my sink. How do I make it stop?"

"My sink's acting up! Need help ASAP!"

Where a purely rule-based bot might recognize only one of these phrasings, the RASA + LLM combination can map all three to the same underlying issue.

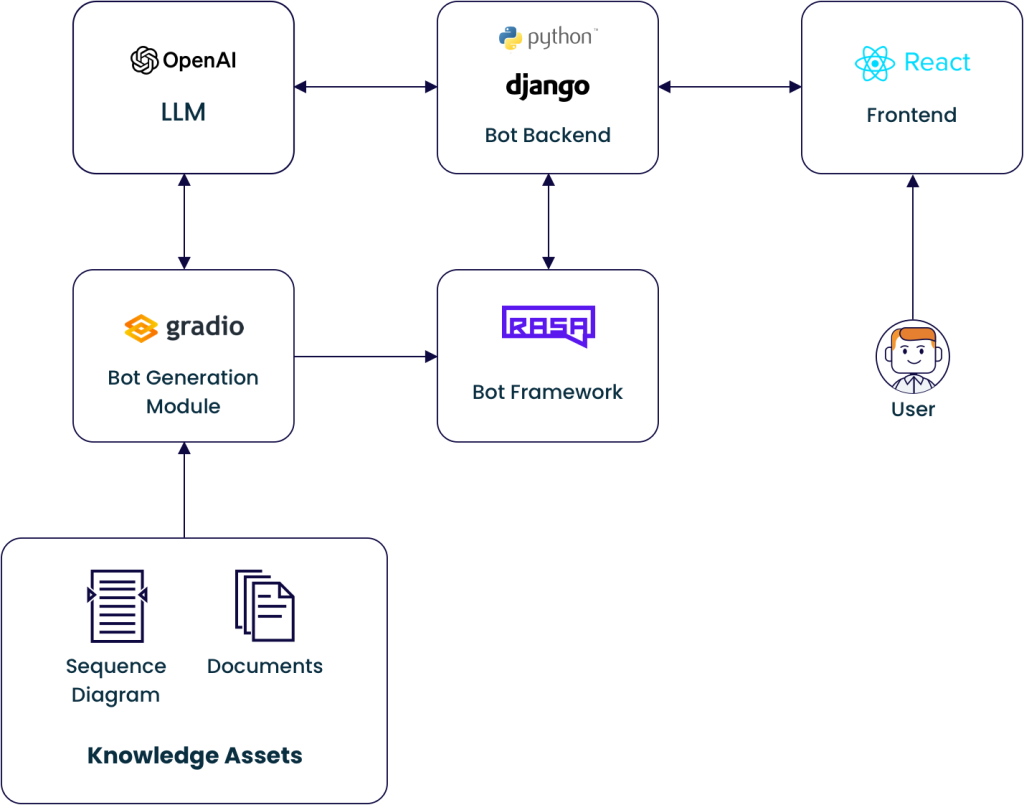

Chatbot Architecture

The hybrid chatbot architecture maximizes the strengths of different technologies while maintaining seamless interactions across them.

- Frontend: Users interact with the chatbot via a responsive and user-friendly interface. They can report issues, ask questions, and get troubleshooting guidance.

- Backend: The backend framework manages interactions between the interface, RASA, and the LLM. It processes user inputs and coordinates the responses from RASA and the LLM.

- Bot Framework (RASA): Intents, entities, and dialogue management are defined in RASA. RASA’s role is crucial in managing clear, structured conversations and executing specific actions based on user requests.

- Large Language Model: The LLM enhances the chatbot's ability to understand and respond to nuanced queries. It provides the flexibility to interpret unconventional user inputs, including those that RASA's predefined intents do not cover.

- Knowledge Assets: Assets (such as documents and sequence diagrams) form the knowledge base for the chatbot. By regularly updating the knowledge assets, we ensure that the chatbot can handle new issues and scenarios without a hitch.

- Bot Generation Module: Once the knowledge assets are uploaded, the bot generation module processes them, extracts relevant information, and automatically generates RASA configuration files and conversational templates.

How Our Chatbot Outperforms Conventional Bots

Our property management chatbot stands out from bots built exclusively with RASA or an LLM for four principal reasons.

1. Contextual Understanding

The RASA and LLM combination helps the chatbot grasp user inputs, however they are worded, and guide users to a proper resolution. When a user reports a problem, the backend forwards the input to RASA, which attempts to match it to one of its predefined intents. If RASA identifies a matching intent, it handles the conversation flow as programmed. If it cannot classify the input, the backend sends the input, along with the list of all available intents, to the LLM. The LLM analyzes the input against those intents and picks the most appropriate one, which is then triggered within the RASA framework so that the user still receives a relevant response.
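The fallback flow described above can be sketched in Python. This is a minimal sketch, not the team's implementation: `route_intent`, the stand-in classifiers, the intent names, and the 0.7 confidence threshold are all hypothetical. In a real deployment, `rasa_parse` might wrap RASA's HTTP endpoint `POST /model/parse`, `llm_choose_intent` would prompt the LLM with the message and the intent list, and the chosen intent could be injected into the conversation through `POST /conversations/{conversation_id}/trigger_intent`.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical cut-off: RASA predictions below this confidence count as "not classified".
CONFIDENCE_THRESHOLD = 0.7


def route_intent(
    message: str,
    rasa_parse: Callable[[str], Tuple[str, float]],
    llm_choose_intent: Callable[[str, List[str]], Optional[str]],
    available_intents: List[str],
) -> Tuple[Optional[str], str]:
    """Return (intent, source), where source is "rasa", "llm" or "unresolved"."""
    # Step 1: let RASA try to match the input to a predefined intent.
    intent, confidence = rasa_parse(message)
    if intent in available_intents and confidence >= CONFIDENCE_THRESHOLD:
        return intent, "rasa"
    # Step 2: RASA could not classify it, so send the input plus every
    # available intent to the LLM and ask it to pick the best match.
    chosen = llm_choose_intent(message, available_intents)
    if chosen in available_intents:
        # Step 3: the caller would now trigger this intent inside RASA.
        return chosen, "llm"
    return None, "unresolved"


# Hypothetical intents and stand-ins, so the sketch runs without RASA or an LLM.
INTENTS = ["report_leak", "thermostat_issue", "general_query"]


def fake_rasa_parse(text: str) -> Tuple[str, float]:
    # Stand-in for RASA NLU: confident only on the literal word "leaking".
    if "leaking" in text.lower():
        return "report_leak", 0.95
    return "general_query", 0.30


def fake_llm_choose_intent(text: str, intents: List[str]) -> Optional[str]:
    # Stand-in for an LLM prompt that returns one name from `intents` (or nothing).
    lowered = text.lower()
    if "drip" in lowered or "sink" in lowered:
        return "report_leak"
    return None


print(route_intent("Hey, my kitchen sink's leaking.", fake_rasa_parse, fake_llm_choose_intent, INTENTS))
# ('report_leak', 'rasa')
print(route_intent("There's an annoying drip from my sink.", fake_rasa_parse, fake_llm_choose_intent, INTENTS))
# ('report_leak', 'llm')
```

Passing the two classifiers in as functions keeps the routing logic testable without a running RASA server or LLM.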

2. Intelligent Problem Resolution

Problem resolution has a direct impact on user experience and satisfaction, a key concern for property managers. This is how it works:

- Problem identification: The chatbot distinguishes between problems that need expert intervention and those that tenants can resolve themselves. For issues that require a professional, it promptly creates a ticket.

- Step-by-step instructions for problem-solving: If an issue is simple and manageable, the chatbot offers easy-to-follow instructions to resolve it. For example, if a tenant has a thermostat that's not working properly, the chatbot can guide them through a quick troubleshooting process using visual aids such as GIFs and images.

- Critical issue management: The chatbot can recognize critical scenarios and instruct the tenant on necessary precautions, such as turning off specific valves or shutting down appliances to ensure safety.

- Ticket generation: For complex issues that tenants cannot resolve themselves, the chatbot automatically generates a ticket with all relevant details and routes it to the corresponding department.
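The triage rules above can be sketched as a small Python function. Everything here is hypothetical: the three `Severity` levels, the catalog entries, the department names, and the choice to raise a ticket for critical issues after the safety instructions. In practice, the issue and its severity would presumably come from the matched intent and the knowledge assets.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class Severity(Enum):
    SELF_SERVICE = "self_service"  # tenant can fix it with instructions
    PROFESSIONAL = "professional"  # needs expert intervention
    CRITICAL = "critical"          # safety precautions first, then (assumed) a ticket


@dataclass
class Issue:
    name: str
    severity: Severity
    department: str
    steps: List[str] = field(default_factory=list)        # self-service instructions
    precautions: List[str] = field(default_factory=list)  # safety actions for critical issues


@dataclass
class Ticket:
    issue: str
    department: str
    unit: str
    description: str


@dataclass
class Resolution:
    messages: List[str]
    ticket: Optional[Ticket] = None


def resolve(issue: Issue, unit: str, description: str) -> Resolution:
    """Turn an identified issue into chatbot messages and, if needed, a ticket."""
    if issue.severity is Severity.SELF_SERVICE:
        # Simple issue: walk the tenant through the fix, no ticket needed.
        steps = [f"Step {i}: {s}" for i, s in enumerate(issue.steps, start=1)]
        return Resolution(steps)
    messages: List[str] = []
    if issue.severity is Severity.CRITICAL:
        # Safety instructions come before anything else.
        messages.extend(f"Safety first: {p}" for p in issue.precautions)
    # Professional or critical: create a ticket with the details and route it.
    ticket = Ticket(issue.name, issue.department, unit, description)
    messages.append(f"A ticket has been created and sent to {issue.department}.")
    return Resolution(messages, ticket)


# Hypothetical catalog entries, e.g. derived from the knowledge assets.
CATALOG: Dict[str, Issue] = {
    "thermostat_issue": Issue(
        "thermostat_issue", Severity.SELF_SERVICE, "HVAC",
        steps=["Replace the thermostat batteries.", "Set the mode to HEAT or COOL."],
    ),
    "oven_not_heating": Issue("oven_not_heating", Severity.PROFESSIONAL, "Appliances"),
    "burst_pipe": Issue(
        "burst_pipe", Severity.CRITICAL, "Plumbing",
        precautions=["Turn off the main water valve."],
    ),
}

print(resolve(CATALOG["burst_pipe"], "4B", "Water spraying under the sink").messages)
# ['Safety first: Turn off the main water valve.', 'A ticket has been created and sent to Plumbing.']
```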

3. Automated Bot Generation

Building chatbots with frameworks like RASA involves extensive manual configuration. Developers have to meticulously review client documents and manually set up conversational flows and configuration files. Needless to say, mapping out every potential interaction and response is time-intensive.

Our custom chatbot generation system addresses these challenges by automating the extraction of essential details from client documents. It uses LLMs, with their advanced language-understanding capabilities, to process the documents and extract the required information efficiently. The bot generation module then uses this information to automatically generate the files needed to update RASA’s intents, entities, and conversation templates.

Most cases require converting the extracted information into formats suitable for conversational interfaces. With the use of LLMs, this conversion becomes straightforward, as they can generate natural-sounding dialogues and responses based on the extracted data. This automation drastically reduces the time and effort required to set up and maintain the chatbot, allowing it to quickly adapt to new information and consistently meet user expectations.

4. Scalability and Flexibility

Last but not least, the chatbot can efficiently handle a wide range of user queries, from simple, well-defined issues to more complex, open-ended questions. The hybrid setup allows the chatbot to learn continuously from interactions, improving its accuracy and expanding its capabilities over time.
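One plausible way to implement this continuous learning, offered here only as an assumption, is to collect the phrasings that RASA failed to classify but the LLM mapped to an intent, and feed them back as new RASA training examples. `FeedbackCollector` and its `source` labels (`"rasa"` or `"llm"`, naming which component classified the message) are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set


class FeedbackCollector:
    """Hypothetical helper: gathers phrasings RASA missed but the LLM resolved."""

    def __init__(self) -> None:
        self._examples: Dict[str, Set[str]] = defaultdict(set)

    def record(self, message: str, intent: str, source: str) -> None:
        # Only LLM-resolved inputs are new information for RASA's classifier;
        # messages RASA already understood would not teach it anything.
        if source == "llm":
            self._examples[intent].add(message.strip())

    def new_examples(self) -> Dict[str, List[str]]:
        # Sorted, de-duplicated examples per intent, ready to append to training data.
        return {intent: sorted(msgs) for intent, msgs in self._examples.items()}


collector = FeedbackCollector()
collector.record("There's an annoying drip from my sink.", "report_leak", "llm")
collector.record("Hey, my kitchen sink's leaking.", "report_leak", "rasa")
print(collector.new_examples())
```

Reviewing these examples before retraining would help keep occasional LLM misclassifications out of RASA's training data.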

Beyond Property Management

Our chatbot, originally designed for real estate and property management, can also benefit other industries where customer service is crucial. After all, its core functions (handling inquiries and providing personalized support) are universally valuable. It can be tailored to industries such as healthcare, banking, retail, and hospitality by feeding industry-specific knowledge assets into the bot generation module, which then automatically configures the chatbot with the intents, entities, and dialogue flows for each industry or business process. As long as the knowledge assets contain comprehensive information, our chatbot can adapt to the specific sector or use case.