At Google Cloud Next 2025 in Las Vegas, Google announced a series of developments that mark a major shift in how AI systems are designed and deployed. Among them is the Agent2Agent (A2A) protocol, an open standard that enables AI agents to collaborate securely and autonomously.

Why A2A Protocol?

As AI agents evolve from single-task tools to collaborative entities, robust protocols for coordination and communication between agents have become imperative. Without a shared protocol, these interactions can be brittle and insecure. A2A addresses this by standardizing how agents work together.

Think of a travel planner made up of agents. One agent could specialize in local weather and event data. Another might handle bookings across airlines and hotels. A third could manage itinerary optimization based on user preferences. These agents, built by different vendors and hosted on various platforms, can discover each other and coordinate in real time using the A2A protocol.

How Does It Work?

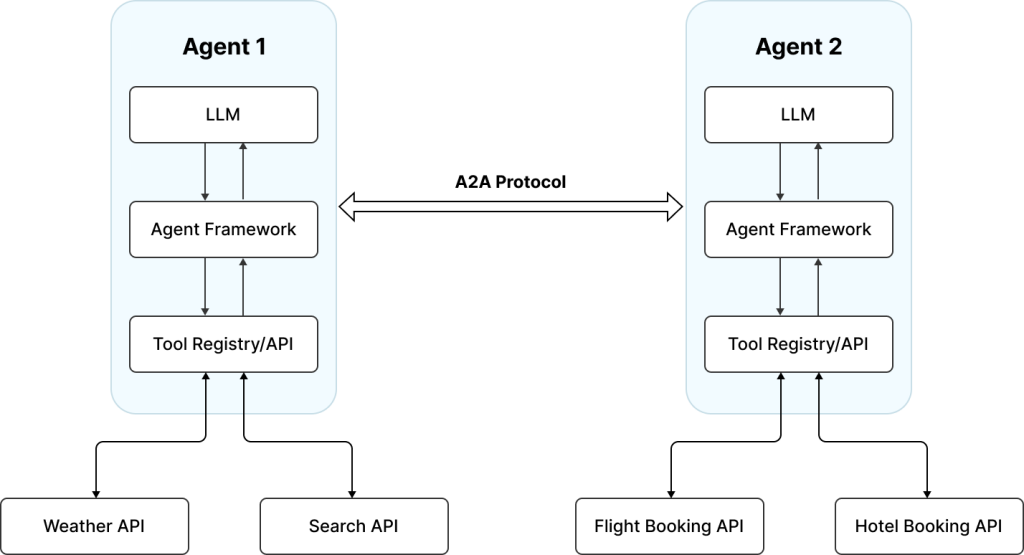

The A2A protocol defines how AI agents discover each other, share their capabilities, and exchange messages regardless of who built them, what models they use, or where they are hosted. It enables a client agent to delegate a task to a remote agent, which performs the work and returns results.

This interaction unfolds in four stages:

Capability Discovery

Each agent publishes an Agent Card, a machine-readable JSON file, that describes what it can do. When a task arises, the client agent scans available cards to find a remote agent with the right capabilities.
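The discovery step can be sketched in a few lines of plain Python. This is a minimal illustration, not the A2A reference implementation: the in-memory `AGENT_CARDS` list, the second "Booking Agent" card, and the `find_agents` helper are hypothetical, and the card fields shown (`name`, `url`, `skills`, `tags`) are only the subset that appears in the agent card later in this article.

```python
# Hypothetical in-memory registry of Agent Cards. Only a subset of card
# fields is shown; this is not the full A2A schema.
AGENT_CARDS = [
    {
        "name": "Get Weather Agent",
        "url": "http://localhost:5000",
        "skills": [{"name": "Get Weather", "tags": ["weather", "forecast"]}],
    },
    {
        "name": "Booking Agent",  # made-up second agent, for contrast
        "url": "http://localhost:5001",
        "skills": [{"name": "Book Hotel", "tags": ["booking", "hotel"]}],
    },
]


def find_agents(cards, required_tag):
    """Return the cards that advertise at least one skill with the given tag."""
    matches = []
    for card in cards:
        for skill in card.get("skills", []):
            if required_tag in skill.get("tags", []):
                matches.append(card)
                break  # one matching skill is enough for this card
    return matches


if __name__ == "__main__":
    for card in find_agents(AGENT_CARDS, "weather"):
        print(card["name"], "->", card["url"])
```

A real client would fetch cards over HTTP rather than from a hard-coded list, but the matching logic would look similar.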

Task Management

Tasks are formalized as structured objects with a defined lifecycle (created, in progress, completed). The client assigns the task, and both agents exchange updates to track its status. Tasks can be short-lived or long-running.
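As a rough sketch, the lifecycle described above can be modeled as a small state machine. The `Task` class, the `TaskState` names, and the `ALLOWED` transition table below are illustrative assumptions that follow the three states named in this article; the actual A2A specification defines additional states (for example, for failure and cancellation) that are omitted here.

```python
from dataclasses import dataclass, field
from enum import Enum


class TaskState(Enum):
    CREATED = "created"
    IN_PROGRESS = "in progress"
    COMPLETED = "completed"


# Allowed forward transitions for this simplified lifecycle (an assumption).
ALLOWED = {
    TaskState.CREATED: {TaskState.IN_PROGRESS},
    TaskState.IN_PROGRESS: {TaskState.COMPLETED},
    TaskState.COMPLETED: set(),
}


@dataclass
class Task:
    task_id: str
    description: str
    state: TaskState = TaskState.CREATED
    history: list = field(default_factory=list)

    def advance(self, new_state: TaskState) -> None:
        """Move to new_state, rejecting transitions the lifecycle does not allow."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(
                f"cannot go from {self.state.value} to {new_state.value}"
            )
        self.history.append(self.state)  # keep a trail of earlier states
        self.state = new_state


if __name__ == "__main__":
    task = Task("t-1", "Get weather for Tokyo")
    task.advance(TaskState.IN_PROGRESS)
    task.advance(TaskState.COMPLETED)
    print(task.state.value)  # completed
```

Keeping the transition table explicit makes it easy for both agents to agree on which status updates are valid for a long-running task.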

Collaboration

Agents communicate through structured messages that carry context, prompts, or results. These may include intermediate artifacts such as text, images, or data, enabling richer, more coordinated workflows.
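One way to picture how messages carry context is to link each reply to the message it answers. The sketch below is a simplified stand-in, not the python_a2a `Message` class: `SketchMessage` and `reply_to` are hypothetical names, and the `conversation_id` and `parent_message_id` fields are borrowed from the weather agent example later in this article.

```python
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SketchMessage:
    text: str
    role: str  # "user" or "agent"
    conversation_id: str
    parent_message_id: Optional[str] = None
    message_id: str = field(default_factory=lambda: str(uuid.uuid4()))


def reply_to(message: SketchMessage, text: str) -> SketchMessage:
    """Build an agent reply that stays in the same conversation thread."""
    return SketchMessage(
        text=text,
        role="agent",
        conversation_id=message.conversation_id,
        parent_message_id=message.message_id,
    )


if __name__ == "__main__":
    request = SketchMessage("Tokyo", role="user", conversation_id="conv-1")
    response = reply_to(request, "Weather report for Tokyo goes here")
    print(response.parent_message_id == request.message_id)  # True
```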

User Experience Negotiation

To ensure outputs are rendered correctly across systems, agents divide results into parts (text, image, form, etc.) and describe each part’s type and format. This allows receiving agents to decide how best to present the response.
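The idea of typed parts can be sketched as follows. The part dictionaries, the `RENDERERS` table, and the `render` function are assumptions made for illustration; the real protocol defines its own part schema, and the sample content values are invented.

```python
# Renderers for the part types this hypothetical client can display.
RENDERERS = {
    "text": lambda part: part["content"],
    "image": lambda part: f"[image ({part['format']}): {part['content']}]",
}


def render(parts, renderers=RENDERERS):
    """Render each part with a matching renderer, or emit a fallback notice."""
    output = []
    for part in parts:
        renderer = renderers.get(part["type"])
        if renderer is None:
            output.append(f"[unsupported part type: {part['type']}]")
        else:
            output.append(renderer(part))
    return output


# Invented sample response split into parts, each declaring its type and format.
response_parts = [
    {"type": "text", "format": "text/plain", "content": "Mild and breezy."},
    {"type": "image", "format": "image/png", "content": "forecast.png"},
    {"type": "form", "format": "application/json", "content": "{}"},
]

if __name__ == "__main__":
    for line in render(response_parts):
        print(line)
```

Because each part declares its own type, a receiving agent with no form support can still show the text and image and degrade gracefully on the rest.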

Every agent can expose only selected capabilities through its Agent Card, limiting what other agents can invoke. Conversations are signed and tracked, and agents can include identity proofs (such as OAuth tokens or digital signatures) to verify who they are talking to.
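To make the idea of signed messages concrete, here is a minimal sketch that uses an HMAC over the message payload. This is a swapped-in technique chosen because it needs only the standard library; it is not how A2A itself authenticates agents, and the `SHARED_SECRET`, `sign`, and `verify` names are hypothetical. Production systems would rely on OAuth tokens or asymmetric digital signatures, as noted above.

```python
import hashlib
import hmac
import json

SHARED_SECRET = b"demo-secret"  # assumption: both agents already share this key


def sign(payload: dict, secret: bytes = SHARED_SECRET) -> str:
    """Return a hex HMAC-SHA256 signature over a canonical JSON encoding."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def verify(payload: dict, signature: str, secret: bytes = SHARED_SECRET) -> bool:
    """Check the signature using a constant-time comparison."""
    return hmac.compare_digest(sign(payload, secret), signature)


if __name__ == "__main__":
    message = {"conversation_id": "conv-1", "text": "Tokyo"}
    signature = sign(message)
    print(verify(message, signature))                           # True
    print(verify({**message, "text": "Paris"}, signature))      # False
```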

Agent Collaboration Example: Weather and Travel Recommendation

To demonstrate how A2A works, let’s build a system with two agents:

- Weather agent: a lightweight HTTP server built with python_a2a, a messaging abstraction layer. It listens for city names and responds with current temperature and wind data. It uses static coordinates for four cities (London, New York, Paris, Tokyo) and fetches data from the Open-Meteo API.
- User agent: sends a request to the weather agent, receives the weather report, and then uses ollama to query a local LLM for a recommendation.

Dependencies and Setup

Install required packages:

    pip install python-a2a requests ollama
    ollama pull deepseek-r1:1.5b

Download and install Ollama if not already installed.

Create two files in your working directory:

- weather_agent.py
- user_agent.py

File Structure

weather_agent.py # The agent providing weather data (server)

    from python_a2a import A2AServer, Message, TextContent, MessageRole, run_server
    from python_a2a import skill, agent
    import requests

    # Map city names to coordinates for Open-Meteo
    CITY_COORDS = {
        "london": (51.5074, -0.1278),
        "new york": (40.7128, -74.0060),
        "paris": (48.8566, 2.3522),
        "tokyo": (35.6762, 139.6503)
    }


    def get_weather(city: str) -> str:
        city = city.lower()
        if city not in CITY_COORDS:
            return "Sorry, I only know the weather for London, New York, Paris, or Tokyo."
        lat, lon = CITY_COORDS[city]
        url = (
            "https://api.open-meteo.com/v1/forecast"
            f"?latitude={lat}&longitude={lon}&current_weather=true"
        )
        try:
            # A timeout keeps the agent from hanging if the API is unreachable
            response = requests.get(url, timeout=10)
            response.raise_for_status()
            data = response.json()
            current = data.get("current_weather", {})
            temp = current.get("temperature")
            wind = current.get("windspeed")
            # Retrieved from the API but not included in the reply below
            condition = current.get("weathercode", "unknown")
            return f"The current temperature in {city.title()} is {temp}°C with wind speed {wind} km/h."
        except Exception as e:
            return f"Failed to fetch weather: {str(e)}"


    @skill(
        name="Get Weather",
        description="Get current weather for a location",
        tags=["weather", "forecast"],
        examples="I am a weather agent for getting weather forecast from Open Meteo"
    )
    @agent(
        name="Get Weather Agent",
        description="Get weather information for a specified city",
        version="1.0.0",
        url="https://sampledomain.com")
    class WeatherAgent(A2AServer):
        def handle_message(self, message: Message):
            # The incoming message text is treated as the city name
            city = message.content.text.strip()
            reply = get_weather(city)
            return Message(
                content=TextContent(text=reply),
                role=MessageRole.AGENT,
                parent_message_id=message.message_id,
                conversation_id=message.conversation_id,
            )


    if __name__ == "__main__":
        run_server(WeatherAgent(), host="localhost", port=5000)
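The weather agent reads the `weathercode` field but does not use it in its reply. As a possible extension, the sketch below turns that code into a short description. The `WEATHER_CODES` table is a partial mapping of the WMO weather interpretation codes that Open-Meteo documents; `describe_weathercode` and `format_report` are hypothetical helpers, and the sample payload values are invented for illustration rather than taken from a real API response.

```python
# Partial mapping of WMO weather interpretation codes (as documented by
# Open-Meteo). Codes not listed here fall back to a generic description.
WEATHER_CODES = {
    0: "clear sky",
    1: "mainly clear",
    2: "partly cloudy",
    3: "overcast",
    45: "fog",
    61: "slight rain",
    63: "moderate rain",
    65: "heavy rain",
    71: "slight snowfall",
    95: "thunderstorm",
}


def describe_weathercode(code) -> str:
    """Translate a numeric weather code into a short description."""
    return WEATHER_CODES.get(code, "unknown conditions")


def format_report(city: str, current: dict) -> str:
    """Build a reply from a current_weather-style dictionary."""
    temp = current.get("temperature")
    wind = current.get("windspeed")
    condition = describe_weathercode(current.get("weathercode"))
    return (
        f"The current temperature in {city.title()} is {temp}°C "
        f"with wind speed {wind} km/h ({condition})."
    )


if __name__ == "__main__":
    # Invented sample values, shaped like the current_weather object
    sample = {"temperature": 21.5, "windspeed": 12.0, "weathercode": 2}
    print(format_report("tokyo", sample))
```

Passing the description along gives the downstream LLM more to work with than temperature and wind alone.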

Here’s the JSON representation of the agent card:

    {
      "name": "Get Weather Agent",
      "description": "Get weather information for a specified city.",
      "url": "http://localhost:5000",
      "version": "1.0.0",
      "skills": [
        {
          "name": "Get Weather",
          "description": "Get current weather for a location",
          "examples": [
            "What's the weather in London?",
            "Show me the weather forecast for Paris.",
            "I am a weather agent for getting weather forecast from Open Meteo"
          ],
          "tags": ["weather", "forecast"]
        }
      ]
    }

user_agent.py (client agent)

    from python_a2a import A2AClient, Message, TextContent, MessageRole
    import ollama


    def generate_response_with_llm(city: str, weather_info: str) -> str:
        prompt = (
            f"A user is considering traveling to {city}. "
            f"The current weather information is: {weather_info}\n\n"
            "Based on this weather, give a natural, friendly, and helpful recommendation "
            "on whether it is a good idea to travel there right now. "
            "If the weather seems dangerous or unpleasant, advise caution or postponement. "
            "If it's nice or tolerable, encourage the trip. Keep the tone warm and informative."
        )
        response = ollama.generate(
            model='deepseek-r1:1.5b',
            prompt=prompt,
            # Ollama's option for limiting generated tokens is num_predict
            options={'temperature': 0.2, 'num_predict': 2000}
        )
        return response['response']


    def main():
        # Connect to the weather agent started by weather_agent.py
        client = A2AClient("http://localhost:5000")
        city = input("Enter city (London, New York, Paris, Tokyo): ")
        msg = Message(content=TextContent(text=city), role=MessageRole.USER)
        response = client.send_message(msg)
        raw_weather = response.content.text
        print("\n[Raw weather data from agent]:", raw_weather)
        friendly_output = generate_response_with_llm(city, raw_weather)
        print("\n[LLM-enhanced explanation]:", friendly_output)


    if __name__ == "__main__":
        main()

Start the weather agent server, then run the client agent in a second terminal:

    python weather_agent.py
    python user_agent.py

The system fetches raw weather data from weather_agent.py and uses the deepseek-r1:1.5b model via Ollama to generate a travel recommendation.

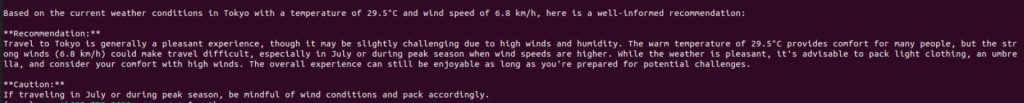

In the output produced by this A2A-based workflow, the model suggests that travel to Tokyo is generally pleasant, though high winds and humidity may make it slightly challenging. The recommendation advises packing light clothing and an umbrella, and notes that with minimal preparation, the trip can be enjoyable.

While this implementation is limited to two agents, it is feasible to develop multiple agents capable of supporting a wide range of real-world applications.

For those looking to build with A2A, Google provides an official Python SDK.

A2A and MCP: How They Relate

Model Context Protocol (MCP), introduced by Anthropic, standardizes how an agent connects to external tools and data sources such as APIs, files, and databases. It allows a single agent to reason more effectively by grounding its decisions in structured data or tool outputs.

A2A, by contrast, operates between agents, so the two protocols are complementary: MCP connects an agent to its tools and data, while A2A connects agents to each other. A2A is also framework-agnostic. It enables agents built with different runtimes or libraries, such as openai-agents, langgraph, or custom implementations, to discover each other, communicate, and collaborate over a shared protocol. This compatibility lowers the barrier for teams to integrate existing tools or frameworks, regardless of their internal architecture.