Integrating Large Language Models (LLMs) with custom data sources typically requires writing extensive function definitions and implementing them by hand. This work has to be repeated for every use case, which makes it cumbersome to build real-time AI applications with two-way communication. The Model Context Protocol (MCP), released by Anthropic, offers a standard way to connect AI systems with their data sources, removing much of that repetitive coding.

By standardizing the currently fragmented ways of connecting data to LLMs, MCP can make AI-powered systems more efficient and more capable. As an open-source project that is not limited to Anthropic's models, it could become a universal standard for connectivity to LLMs.

How MCP Works

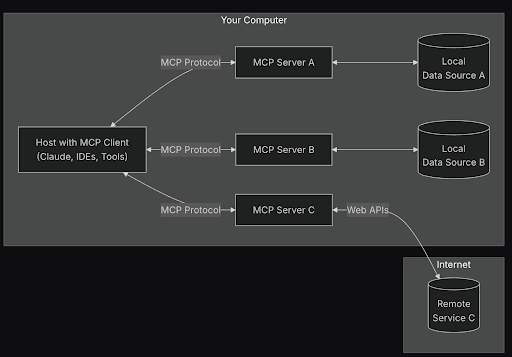

MCP's general architecture follows the structure outlined below.

- MCP Hosts: Applications such as Claude Desktop, IDEs, or AI tools that require access to custom data sources.

- MCP Clients: Protocol clients that establish one-to-one connections with MCP servers. Typically, they function as a service or component within MCP Hosts.

- MCP Servers: Lightweight programs designed to expose specific capabilities through MCP. They provide context and functionalities essential to the MCP flow.

- Local Data Sources: Files, databases, and services accessible via MCP servers.

- Remote Services: External APIs and online resources connected via MCP servers.

A comprehensive list of example MCP servers and local data sources can be found here.
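To make the roles above concrete, here is a toy Python model of the relationships. It is not the MCP SDK: it only shows that a host owns several clients and that each client is bound to exactly one server. The class names `Host`, `Client`, and `Server` and the capability strings are hypothetical, chosen purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Server:
    """A lightweight program exposing a set of named capabilities."""
    name: str
    capabilities: tuple[str, ...]


@dataclass(frozen=True)
class Client:
    """Holds a one-to-one connection to a single server."""
    server: Server


@dataclass
class Host:
    """An application (for example a desktop app or IDE) that owns the clients."""
    name: str
    clients: list[Client] = field(default_factory=list)

    def connect(self, server: Server) -> Client:
        # One new client per server keeps each connection one-to-one.
        client = Client(server)
        self.clients.append(client)
        return client

    def capabilities(self) -> dict[str, tuple[str, ...]]:
        # Everything the host can reach, grouped by server name.
        return {c.server.name: c.server.capabilities for c in self.clients}


host = Host("example-host")
host.connect(Server("files", ("read_file",)))
host.connect(Server("aviation", ("get_flights",)))
print(host.capabilities())
```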

A Real-World Example: Fetching Live Flight Data

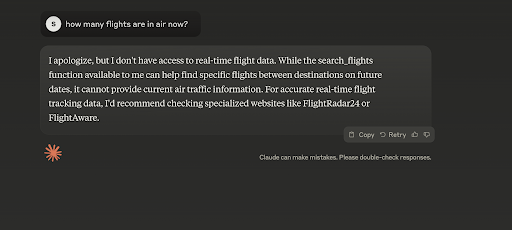

To demonstrate MCP in action, let’s use a remote service: an aviation API that provides real-time flight data. Without MCP, Claude or any LLM would be unable to answer a simple query like “How many flights are in the air right now?” due to the lack of real-time data access. With MCP, we can bridge that gap.

Let’s tap into a free API from aviationstack that lists all flights in the air. For simplicity (to avoid pagination logic), we’ll stick with the default limit of 100 results on a single page.

The code looks like this:

    import os

    import requests
    from dotenv import load_dotenv

    # Load environment variables (AVIATION_ACCESS_KEY) from a .env file
    load_dotenv()


    def get_flights():
        """Fetch the first page of flights from the aviationstack API."""
        params = {
            'access_key': os.getenv('AVIATION_ACCESS_KEY')
        }
        api_result = requests.get('https://api.aviationstack.com/v1/flights', params=params)
        api_response = api_result.json()
        return api_response


    if __name__ == "__main__":
        print(get_flights())

Run the script with uv:

    uv run aviation.py

Note: We are using “uv” to run Python here, but alternatives work as well. “uv run” is easier to invoke in the MCP context, as explained below, and uv is also the preferred package manager for the MCP Python SDK.
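One thing the script above does not handle is a missing API key: `os.getenv` returns `None` when `AVIATION_ACCESS_KEY` is unset, and the request is then sent without a usable key. Below is a minimal sketch of a guard, assuming you would rather fail early with a clear message. The helper name `require_env` is hypothetical and not part of the original script.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable, or raise if it is unset or empty."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value


# Example with a placeholder value; in the real script the key comes from .env.
os.environ["AVIATION_ACCESS_KEY"] = "placeholder-key"
print(require_env("AVIATION_ACCESS_KEY"))
```

In `get_flights`, the call `os.getenv('AVIATION_ACCESS_KEY')` could then be swapped for `require_env('AVIATION_ACCESS_KEY')`.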

The dataset includes 291,627 entries, but we will focus on the first 100. Each entry provides detailed information about a flight, including its status, departure, arrival, airline, and flight number.

    "pagination": {
      "limit": 100,
      "offset": 0,
      "count": 100,
      "total": 291627
    },
    "data": [
      {
        "flight_date": "2025-01-31",
        "flight_status": "scheduled",
        "departure": {
          "airport": "Francisco Bangoy International",
          "timezone": "Asia/Manila",
          "iata": "DVO",
          "icao": "RPMD",
          "terminal": null,
          "gate": null,
          "delay": null,
          "scheduled": "2025-01-31T03:35:00+00:00",
          "estimated": "2025-01-31T03:35:00+00:00",
          "actual": null,
          "estimated_runway": null,
          "actual_runway": null
        },
        "arrival": {
          "airport": "Ninoy Aquino International",
          "timezone": "Asia/Manila",
          "iata": "MNL",
          "icao": "RPLL",
          "terminal": "2",
          "gate": null,
          "baggage": null,
          "delay": null,
          "scheduled": "2025-01-31T05:25:00+00:00",
          "estimated": "2025-01-31T05:25:00+00:00",
          "actual": null,
          "estimated_runway": null,
          "actual_runway": null
        },
        "airline": {
          "name": "ANA",
          "iata": "NH",
          "icao": "ANA"
        },
        "flight": {
          "number": "5262",
          "iata": "NH5262",
          "icao": "ANA5262",
          "codeshared": {
            "airline_name": "philippine airlines",
            "airline_iata": "PR",
            "airline_icao": "PAL",
            "flight_number": "2822",
            "flight_iata": "PR2822",
            "flight_icao": "PAL2822"
          }
        },
        "aircraft": null,
        "live": null
      }
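The `pagination` object above is enough to walk the full result set if you ever need more than the first page. The sketch below only computes the offsets to request and makes no network calls. It assumes the API accepts `limit` and `offset` query parameters matching the fields in the response, which you should confirm against the aviationstack documentation. The function name `page_offsets` is hypothetical.

```python
def page_offsets(pagination: dict) -> list[int]:
    """Return the offset of every page needed to cover `total` results."""
    limit = pagination["limit"]
    total = pagination["total"]
    if limit <= 0:
        raise ValueError("limit must be positive")
    return list(range(0, total, limit))


pagination = {"limit": 100, "offset": 0, "count": 100, "total": 291627}
offsets = page_offsets(pagination)
print(len(offsets), offsets[:3], offsets[-1])
```

With the numbers from the response above, covering everything would take 2,917 requests, which is a good reason to stay on the first page for this example.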

For context, if we were to query Claude about the current count of flights in the air before MCP implementation, the question could not be answered due to the absence of real-time data access.

Let’s add MCP to the code; it takes just a few lines.

First, import the MCP SDK and initialize MCP with the name “aviation.” The name is just a key that we can refer to in the Claude Desktop configuration.

    from mcp.server.fastmcp import FastMCP

    mcp = FastMCP("aviation")  # Server name for config file

Next, annotate the function that we are exposing as an MCP tool. An MCP server can expose three main kinds of capability: resources, tools, and prompts.

- Resources function similarly to GET endpoints in a REST API: they provide data without performing significant computation.

- Tools let LLMs take actions through the server. Unlike resources, tools are expected to perform computation and take actions.

- Prompts are reusable templates that help LLMs interact with your server effectively.

Because our function calls an external API to fetch live data, a tool is the appropriate choice here.

    @mcp.tool()
    def get_flights():
        ...  # body unchanged from the earlier listing

Now we need to change the main block to call mcp.run(). This starts the MCP server when the script is run, enabling communication with Claude. Here we use stdio as the transport.

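    if __name__ == "__main__":
        # Start the MCP server over stdio. The earlier print() call is removed
        # because the stdio transport uses stdout for protocol messages.
        mcp.run(transport='stdio')

Next, we need to register the server we created with a client. The client we are going to use here is Claude Desktop.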

We can use the mcp command-line tool to install our MCP server:

    mcp install aviation.py --name "My real-time flight data"

This will create or update the config file at

~/Library/Application Support/Claude/claude_desktop_config.json

with content like the following:

    {
      "mcpServers": {
        "realtime-aviation-server": {
          "command": "uv",
          "args": [
            "--directory",
            "/Users/srijithvijaymohan/Work/Projects/mcp-example/travel",
            "run",
            "aviation.py"
          ]
        }
      }
    }

This tells the client (Claude Desktop) that a local MCP server named “realtime-aviation-server” can be launched by running aviation.py with the uv command from the given directory.
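If you prefer to manage the entry yourself, the same structure can be produced with a few lines of Python. This is a sketch that only builds the JSON in memory; the helper name `add_server` and the directory path are hypothetical, and writing the result to the actual config file is left out on purpose.

```python
import json


def add_server(config: dict, name: str, directory: str, script: str) -> dict:
    """Return a copy of `config` with a uv-launched MCP server entry added."""
    servers = dict(config.get("mcpServers", {}))
    servers[name] = {
        "command": "uv",
        "args": ["--directory", directory, "run", script],
    }
    return {**config, "mcpServers": servers}


existing = {"mcpServers": {}}
updated = add_server(
    existing,
    name="realtime-aviation-server",
    directory="/path/to/project",  # placeholder path
    script="aviation.py",
)
print(json.dumps(updated, indent=2))
```

Returning a new dictionary instead of mutating the input keeps any other registered servers intact and makes the helper easy to test.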

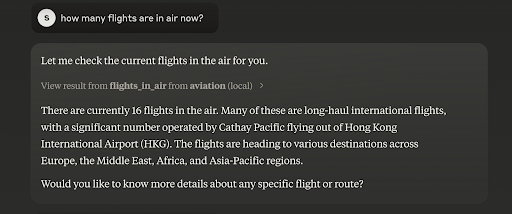

Relaunch Claude Desktop and enter the same prompt we tried earlier. Claude now recognizes that the request matches the registered local server and can use that server to fetch data. It will ask for your permission before running the tool, after which real-time data is retrieved from the local server.

If needed, you can view the raw response from the local server. Claude processes this data, aligns it with the prompt, and presents a clear, human-readable answer.

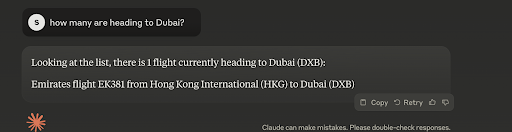
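Under the hood, MCP messages are JSON-RPC 2.0. As a rough illustration of what travels over the stdio transport when the tool is invoked, the sketch below builds a `tools/call` request and parses a reply. The request `id` and the shape of the sample reply are simplified assumptions; consult the MCP specification for the exact schema.

```python
import json


def make_tool_call(request_id: int, tool: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking the server to run a tool."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    # One message per line, with no embedded newlines.
    return json.dumps(message) + "\n"


def read_result(line: str) -> dict:
    """Parse a reply line and return its result, raising on a JSON-RPC error."""
    reply = json.loads(line)
    if "error" in reply:
        raise RuntimeError(reply["error"].get("message", "unknown error"))
    return reply["result"]


request = make_tool_call(1, "get_flights", {})
# Simplified, hand-written reply standing in for the server's output.
sample_reply = (
    '{"jsonrpc": "2.0", "id": 1, '
    '"result": {"content": [{"type": "text", "text": "{}"}]}}'
)
print(request.strip())
print(read_result(sample_reply))
```

In practice the client library handles this framing for you; the sketch is only meant to show that nothing more exotic than line-delimited JSON is involved.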

You can also ask follow-up questions about the retrieved data without triggering another query. Since Claude retains the context, it can respond using the existing data rather than re-requesting it from the server.

This implementation may appear limited due to its dependency on Claude Desktop, which restricts certain use cases. However, it is also possible to develop an MCP Client, similar to the MCP Server we constructed. This would unlock a wide range of real-world applications.

Please note that as MCP continues to evolve rapidly, features or functionalities described in this article may change.