This article explains how enterprises can build a reliable AI Knowledge Platform that transforms organizational data into institutional intelligence. It outlines the design of a Domain Intelligence Layer, a contextual engine that embeds an organization's business logic, workflows, and governance into AI systems. The framework uses a model-agnostic, cloud-native architecture (Azure, AWS) with role-based access control (RBAC) for secure, context-aware knowledge retrieval. Covering principles of agility, data integration, and privacy, the post shows how organizations can advance from isolated AI tools to scalable, auditable systems that reflect their organizational DNA.

Making GenAI Useful Across the Enterprise

Over the past year, enterprises have learned that adopting generative AI is easy. But turning it into a dependable source of intelligence for everyone in the organization? Not so much.

A sales leader may want insights into pipeline health. A developer may need the right API documentation. Each expects quick and contextually precise answers. Delivering that requires more than connecting to a single API or model. It demands the creation of a living, learning Institutional Knowledge Platform that understands your business’s unique DNA.

This article outlines how such a platform can be designed and scaled. It explores the foundational principles and architectural choices that make enterprise-wide intelligence both possible and sustainable.

How to Design a Knowledge Platform

New models arrive every few months, each faster or more specialized than the last, so locking your enterprise knowledge strategy to a single vendor or model is a major risk. The solution? Build agility directly into your architecture.

This comes down to three design principles:

1. Model Agnosticism

The platform must be flexible enough to leverage the right model for the right task. A large language model might handle complex legal analysis, while a lightweight model can take on basic data tasks. This pluggable architecture can optimize performance and costs while preventing vendor lock-in.
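The pluggable idea can be sketched as a small router that maps task types to interchangeable model backends. This is a minimal illustration, assuming every backend is wrapped as a plain `prompt -> text` callable. `ModelRouter`, `large_model`, and `small_model` are hypothetical names, and the two backends are stand-ins rather than real SDK clients.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Assumed backend shape: any callable that takes a prompt and returns text.
# Real SDK clients (OpenAI, Anthropic, Bedrock, Azure AI Foundry) would be wrapped to fit it.
ModelBackend = Callable[[str], str]


@dataclass
class ModelRouter:
    """Routes each task type to a registered model backend (hypothetical helper)."""
    routes: Dict[str, ModelBackend]
    default: ModelBackend

    def register(self, task_type: str, backend: ModelBackend) -> None:
        # Swapping a model is a one-line registration change, not a platform rewrite.
        self.routes[task_type] = backend

    def run(self, task_type: str, prompt: str) -> str:
        # Unrouted tasks fall back to the cheaper default model.
        backend = self.routes.get(task_type, self.default)
        return backend(prompt)


# Stand-in backends for illustration only.
def large_model(prompt: str) -> str:
    return f"[large-model] {prompt}"


def small_model(prompt: str) -> str:
    return f"[small-model] {prompt}"


router = ModelRouter(routes={"legal_analysis": large_model}, default=small_model)
```

Here `router.run("legal_analysis", ...)` goes to the large model while any unregistered task uses the lightweight default; replacing a vendor means registering a different callable under the same task type.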

2. Context-Aware Access

Role-based access control is about relevance as well as security. The platform should know who is asking, why, and what they are authorized to see. A CFO and a marketing manager might ask the same question (“How did we perform last quarter?”), yet their answers must draw on entirely different data views: one focused on margins, the other on campaign metrics.
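A minimal sketch of the CFO versus marketing-manager example: the same question is answered from a context filtered by the requester’s role. It assumes a hard-coded role-to-metrics policy (`ROLE_VIEWS`, hypothetical); a real platform would derive this from identity-provider claims and a policy store.

```python
from typing import Dict, List

# Hypothetical role-to-view policy; in practice it would come from identity-provider
# group claims and a central policy store, not a hard-coded dict.
ROLE_VIEWS: Dict[str, List[str]] = {
    "cfo": ["revenue", "gross_margin", "operating_margin"],
    "marketing_manager": ["campaign_spend", "leads", "conversion_rate"],
}


def scoped_view(role: str, metrics: Dict[str, float]) -> Dict[str, float]:
    """Return only the metrics the given role is allowed to see."""
    # Unknown roles get an empty allow-list, i.e. deny by default.
    allowed = set(ROLE_VIEWS.get(role, []))
    return {name: value for name, value in metrics.items() if name in allowed}
```

For “How did we perform last quarter?”, the CFO’s prompt would be grounded in `scoped_view("cfo", metrics)` and the marketing manager’s in `scoped_view("marketing_manager", metrics)`, so the model never sees data outside the asker’s view.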

3. Seamless Data Integration

An AI platform can only be as intelligent as the data it draws from. True enterprise intelligence demands deep, real-time integration with systems like Salesforce, SharePoint, Jira, HRIS platforms, file drives, and code repositories. The goal is to build a unified, continuously updated knowledge layer that turns fragmented information into actionable insight.
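One common way to keep such a layer continuously updated is incremental sync: each source connector returns only what changed since the last cursor, and the knowledge layer upserts by source and document ID. The sketch below assumes that pattern; `Connector`, `InMemoryConnector`, and `KnowledgeLayer` are hypothetical, and the in-memory connector stands in for real Salesforce, Jira, or SharePoint API calls.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, Iterable, List, Protocol, Tuple


@dataclass
class Document:
    source: str          # e.g. "jira", "sharepoint"
    doc_id: str
    text: str
    updated_at: datetime


class Connector(Protocol):
    """Hypothetical interface each source adapter would implement."""
    name: str

    def fetch_changes(self, since: datetime) -> Iterable[Document]: ...


@dataclass
class InMemoryConnector:
    """Stand-in connector; a real one would call the source system's API."""
    name: str
    docs: List[Document]

    def fetch_changes(self, since: datetime) -> Iterable[Document]:
        return [d for d in self.docs if d.updated_at > since]


@dataclass
class KnowledgeLayer:
    index: Dict[Tuple[str, str], Document] = field(default_factory=dict)
    cursors: Dict[str, datetime] = field(default_factory=dict)

    def sync(self, connector: Connector) -> int:
        """Pull changes since the last cursor for this source; return how many were applied."""
        since = self.cursors.get(connector.name, datetime.min.replace(tzinfo=timezone.utc))
        changed = list(connector.fetch_changes(since))
        for doc in changed:
            # Upsert keyed by (source, id) so an edited document replaces its stale copy.
            self.index[(doc.source, doc.doc_id)] = doc
        if changed:
            self.cursors[connector.name] = max(d.updated_at for d in changed)
        return len(changed)
```

Running `sync` on a schedule (or from source webhooks) for every connector keeps one index current without re-ingesting everything each time.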

Building on the Right Cloud Infrastructure

The architectural principles of agility, security, and contextual intelligence all depend on a strong foundation. Cloud ecosystems like Microsoft Azure and AWS provide that base. Azure AI Foundry offers a modular approach with its Model Catalog, Prompt Flow, and Azure AI Search, enabling fine-grained control and data-connected RAG (Retrieval-Augmented Generation). Amazon Bedrock simplifies orchestration through its Agents and Knowledge Bases, providing managed model access across vendors like Anthropic, Meta, and Amazon.
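As one illustration of the managed-RAG path, here is a minimal sketch of querying an Amazon Bedrock Knowledge Base through the `bedrock-agent-runtime` client’s `retrieve_and_generate` call. It assumes an existing knowledge base; the knowledge base ID and model ARN are placeholders you would supply. The client is passed in as a parameter so the function can be exercised with a stub.

```python
from typing import Any, Dict


def ask_knowledge_base(client: Any, knowledge_base_id: str, model_arn: str, question: str) -> Dict[str, Any]:
    """Query a Bedrock Knowledge Base and return the answer text plus its citations."""
    response = client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                # The generating model is a configuration value, not a code dependency.
                "modelArn": model_arn,
            },
        },
    )
    return {
        "answer": response["output"]["text"],
        "citations": response.get("citations", []),
    }
```

In a real deployment the client would come from `boto3.client("bedrock-agent-runtime")`. Because the model is selected by `model_arn`, switching to a different model changes a configuration string rather than the retrieval pipeline.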

These platforms deliver the engine: infrastructure, orchestration, and scale. What they don’t have is your organization’s unique intelligence, its organizational DNA. That must come from within.

Embedding Organizational DNA

Every organization has its own DNA, the internal knowledge that defines how it sets priorities, approves decisions, and even communicates. Generic AI models can’t embody your way of working unless explicitly taught.

To make AI systems genuinely useful, that DNA must be embedded through a Domain Intelligence Layer, a contextual engine that sits on top of the cloud AI infrastructure.

The Domain Intelligence Layer:

- Interprets user queries through the lens of business roles and policies.

- Shapes responses using the organization’s own language, workflows, and tone.

- Applies logic that reflects how your teams actually make decisions.
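The three responsibilities above can be sketched as a context builder that runs before any model call. This is a minimal illustration, assuming the organization’s DNA is captured as a glossary, per-role policies, decision rules, and a tone guideline. `DomainContext`, `expand_jargon`, `build_system_prompt`, and the sample rule in the usage note are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DomainContext:
    """Hypothetical container for an organization's DNA."""
    glossary: Dict[str, str]        # internal term -> plain meaning
    role_policies: Dict[str, str]   # role -> what answers for that role should focus on
    decision_rules: List[str]       # how teams actually make decisions
    tone: str                       # house style for responses


def expand_jargon(query: str, glossary: Dict[str, str]) -> str:
    """Interpret: annotate internal terms so any model reads them correctly."""
    if not glossary:
        return query
    # Longest terms first so a longer term wins over a shorter one it contains.
    terms = sorted(glossary, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, terms)) + r")\b")
    return pattern.sub(lambda m: f"{m.group(1)} ({glossary[m.group(1)]})", query)


def build_system_prompt(ctx: DomainContext, role: str, query: str) -> str:
    """Combine role policy, decision logic, and tone into the model's instructions."""
    policy = ctx.role_policies.get(role, "Answer only from company-wide knowledge.")
    rules = "\n".join(f"- {rule}" for rule in ctx.decision_rules)
    return (
        f"Tone: {ctx.tone}\n"
        f"Role policy ({role}): {policy}\n"
        f"Decision rules:\n{rules}\n"
        f"User question: {expand_jargon(query, ctx.glossary)}"
    )
```

For example, with a glossary entry `"ARR": "annual recurring revenue"` and an illustrative rule such as “Discounts above 20% need VP approval”, the same underlying model answers in the organization’s vocabulary and within its decision norms.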

Balancing Data Access and Privacy

An effective AI knowledge platform requires a multi-domain architecture with Role-Based Access Control (RBAC). This structure keeps information access manageable: every employee can reach universally required knowledge, while departmental and individual access is tailored to specific needs.
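The tiered model described above (company-wide, departmental, individual) can be expressed as a set of domains a user may read. This is a minimal sketch under the assumption that every document is tagged with a single domain label; the label scheme (`company_wide`, `dept:<name>`, project grants) is hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Set


@dataclass(frozen=True)
class User:
    user_id: str
    department: str
    extra_grants: FrozenSet[str] = frozenset()  # individual, need-to-know domains


def accessible_domains(user: User) -> Set[str]:
    domains = {"company_wide"}                # universally required knowledge
    domains.add(f"dept:{user.department}")    # departmental knowledge
    domains |= set(user.extra_grants)         # individually granted domains
    return domains


def can_read(user: User, doc_domain: str) -> bool:
    """True if the document's domain is within the user's tiers."""
    return doc_domain in accessible_domains(user)
```

Keeping the tiers additive means a new hire gets company-wide and departmental access automatically, and only exceptions need explicit grants.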

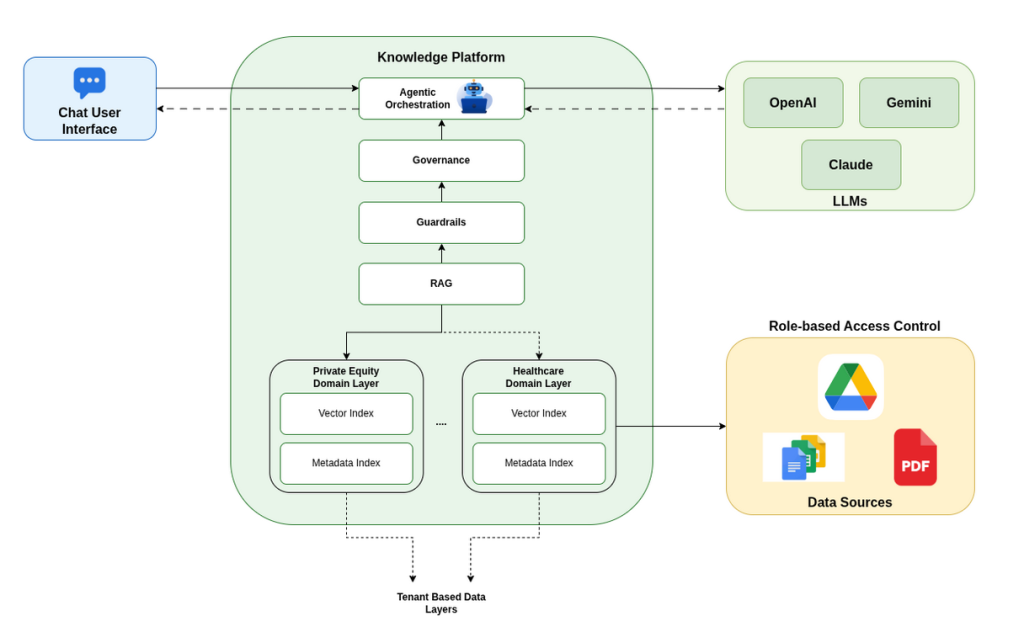

In the reference architecture shown below, RBAC is built into every layer so that users can access only the knowledge they are authorized to see, while sensitive data stays insulated by role, function, and policy.

When a user types a message in the chat interface, it’s securely sent to the Knowledge Platform. The message first passes through input guardrails that check for sensitive or unsafe content, and then moves to the agentic orchestration layer, which analyzes the intent and decides how to answer the query.
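The first two steps of this flow, an input guardrail followed by intent analysis, can be sketched as below. This is a simplified illustration: the regex patterns and the keyword-based intent table are assumptions standing in for the dedicated PII/safety classifiers and LLM-based intent detection a production orchestrator would more likely use.

```python
import re
from dataclasses import dataclass
from typing import List

# Illustrative patterns only; real guardrails typically rely on dedicated classifiers.
SENSITIVE_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "credit_card": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}

# Hypothetical keyword table standing in for the orchestrator's intent analysis.
INTENT_KEYWORDS = {
    "retrieve": ("policy", "document", "how do", "what is"),
    "analytics": ("pipeline", "quarter", "revenue"),
}


@dataclass
class GuardrailResult:
    allowed: bool
    reasons: List[str]


def check_input(message: str) -> GuardrailResult:
    """Block messages that contain sensitive patterns before they reach any model."""
    hits = [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(message)]
    return GuardrailResult(allowed=not hits, reasons=hits)


def classify_intent(message: str) -> str:
    """Pick a route: 'retrieve' triggers RAG, 'analytics' a data query, else plain chat."""
    text = message.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return "chat"
```

A message that fails `check_input` would be rejected (or masked) before orchestration; otherwise `classify_intent` decides whether the retrieval path is needed at all.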

Depending on the context, it might trigger RAG to fetch relevant information from domain-specific data layers such as Private Equity or Healthcare, each equipped with a vector index for semantic search and a metadata index for contextual filtering. The system enforces RBAC to ensure the user only accesses content they are authorized to view from data sources like Google Drive, Docs, or PDFs.
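The retrieval step can be sketched as metadata filtering followed by semantic ranking. This minimal example assumes each chunk carries a precomputed embedding plus `domain` and `allowed_roles` metadata, and uses plain cosine similarity in place of a real vector index. Filtering happens before ranking, so unauthorized chunks never become candidates for the LLM.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Set


@dataclass
class Chunk:
    text: str
    embedding: List[float]     # produced by an embedding model in practice
    domain: str                # e.g. "private_equity", "healthcare"
    allowed_roles: Set[str]    # metadata used for RBAC filtering


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vec: Sequence[float], chunks: List[Chunk],
             user_roles: Set[str], domain: str, k: int = 3) -> List[Chunk]:
    # 1. Metadata filter: right domain AND at least one role in common with the user.
    candidates = [c for c in chunks if c.domain == domain and c.allowed_roles & user_roles]
    # 2. Semantic ranking over the authorized candidates only.
    candidates.sort(key=lambda c: cosine(query_vec, c.embedding), reverse=True)
    return candidates[:k]
```

Managed vector stores usually expose the same idea as a metadata filter on the similarity query, which keeps the RBAC check inside the index rather than after it.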

Once the relevant documents are retrieved, the orchestrator passes them, together with the user’s query, to the selected LLM (for example, an OpenAI GPT model, Gemini, or Claude) to generate a well-grounded, context-aware answer.
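Grounding usually comes down to how the retrieved text is packaged for the model. This is a minimal sketch, assuming sources arrive as `(source_id, text)` pairs and the selected LLM is wrapped as a `prompt -> text` callable; the prompt wording is illustrative, not a prescribed template.

```python
from typing import Callable, List, Tuple


def build_grounded_prompt(question: str, sources: List[Tuple[str, str]]) -> str:
    """Place authorized sources in the prompt and ask the model to cite them."""
    context = "\n".join(f"[{source_id}] {text}" for source_id, text in sources)
    return (
        "Answer using only the sources below and cite them by id. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


def answer(question: str, sources: List[Tuple[str, str]], llm: Callable[[str], str]) -> str:
    # With no authorized sources, refuse rather than let the model guess.
    if not sources:
        return "No authorized sources were found for this question."
    return llm(build_grounded_prompt(question, sources))
```

Because `llm` is just a callable, the same grounding logic works with whichever model the router selected.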

The response then passes back through governance and output guardrails for compliance, redaction, and quality checks before being streamed to the user interface. Throughout this process, every action is logged for audit and governance, so each response can be traced and reviewed for accuracy, safety, and transparency.
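The output side can be sketched as a redaction pass plus an append-only audit record. This minimal example assumes email addresses are the only thing to redact and uses an in-memory list of JSON lines in place of a real audit store; a production system would apply broader policies and write to tamper-evident storage.

```python
import json
import re
from datetime import datetime, timezone
from typing import List

# Illustrative redaction rule only: mask email addresses in model output.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    return EMAIL.sub("[REDACTED EMAIL]", text)


def finalize_response(user_id: str, question: str, draft: str, audit_log: List[str]) -> str:
    """Apply output guardrails, record an audit entry, and return the safe response."""
    safe = redact(draft)
    audit_log.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user_id,
        "question": question,
        "redacted": safe != draft,
    }))
    return safe
```

Logging whether redaction fired, rather than the redacted content itself, gives auditors a trail without copying sensitive data into the log.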

Time to Move from Tools to Thinking Systems

The real promise of enterprise AI lies beyond automation or retrieval. It lies in constructing systems that understand how the organization thinks, not just what it stores. When AI systems internalize an enterprise’s logic, vocabulary, and values, they evolve from information tools into institutional partners.

In essence, this is no longer about chatbots or RAG pipelines. It’s about building a thinking system, one that connects people, data, and context through a continuously learning ecosystem. As enterprises design for agility, security, and relevance, this fusion of human knowledge and machine intelligence will define the next frontier of organizational capability.