This article explores how we built a multi-LLM enterprise AI assistant that transforms institutional knowledge into actionable intelligence. Designed for a global sports analytics company, the assistant integrates multiple large language models with strict governance, reasoning control, and role-specific knowledge management. By building on our Institutional Knowledge Platform (IKP), the client achieved faster deployment, a seamless user experience, and responsible AI at scale.

The Case for Enterprise AI Assistants

AI assistants are changing how enterprises access their intellectual capital. Acting as personalized curators, they organize and deliver information with its context intact, so that decisions are faster and better informed.

Our client, a global sports analytics company, wanted an AI assistant with that precision. The assistant had to support everyday decisions while staying true to the company’s voice and standards. A marketing manager, for example, should receive guidance consistent with the brand’s strategy, tone, and policies, rather than generic advice.

Criteria That Shaped Our Architecture

The solution had to meet two critical requirements to ensure technical robustness and enterprise readiness:

- Model Interoperability & Continuity: The architecture had to integrate with multiple Large Language Models (LLMs) so the client could choose the most capable model for each scenario without being tied to a single provider. Switching between models also had to preserve complete conversational continuity.

- Strict Governance: Data compliance and security had to be built into the system. Beyond protecting Personally Identifiable Information (PII) and intellectual property (IP), the client wanted to enforce responsible use of internal knowledge in line with organizational privacy and confidentiality policies.

The client also needed a central administration console to manage evolving knowledge bases and role-specific instruction sets. The interface had to allow authorized users to update and configure the system independently, without developer involvement.

Our Design Approach

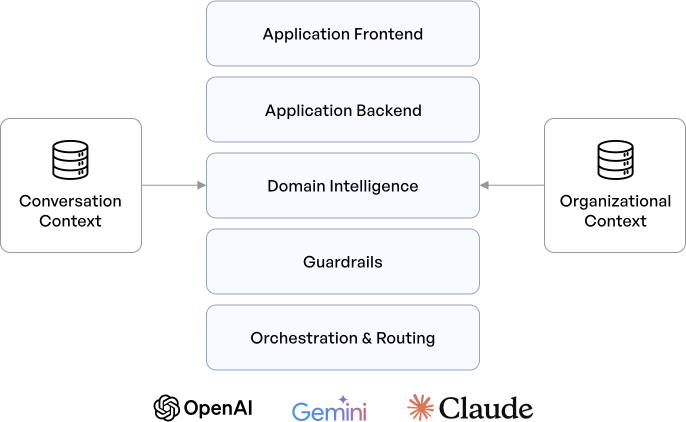

To meet the client's requirements, we engineered a two-tiered architecture on top of existing cloud-based language model APIs.

- Domain Intelligence Layer: This layer filters every response so that it reflects the organization’s specific policies, brand voice, and internal standards.

- LLM Orchestration Layer: This layer is the decision engine. It dynamically selects the most suitable model (OpenAI, Gemini, or Claude) and sets the depth of reasoning required for the task at hand.

Rather than building from scratch, we extended our Institutional Knowledge Platform (IKP), an accelerator for contextual reasoning and secure enterprise knowledge. We rapidly evolved the IKP into a Multi-Provider Executive AI Framework that balances flexibility with robust governance.

Key architectural enhancements included the following:

- Provider-Agnostic Runtime: Enables real-time switching between multiple LLMs while maintaining complete conversation context and continuity.

- Reasoning Controller: Offers configurable reasoning depth, allowing the system to use the most efficient method for the task (for example, Minimal, Chain-of-Thought, or Tree-of-Thought).

- Guardrail Engine: Enforces Responsible AI by automating tasks like PII masking, IP protection, and mandatory policy adherence.
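The provider-switching idea in the list above can be illustrated with a minimal sketch. It assumes that conversation history lives in a session object rather than inside any provider client, so changing the active provider leaves the context untouched. The `Session` class and the stub adapters `stub_a` and `stub_b` are hypothetical names introduced for illustration; they are not part of the IKP or of any vendor SDK.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]  # e.g. {"role": "user", "content": "..."}

# A provider adapter receives the full history and returns the reply text.
ProviderFn = Callable[[List[Message]], str]


@dataclass
class Session:
    """Holds conversation state independently of any provider (hypothetical)."""
    providers: Dict[str, ProviderFn]
    active: str
    history: List[Message] = field(default_factory=list)

    def switch(self, name: str) -> None:
        if name not in self.providers:
            raise KeyError(f"unknown provider: {name}")
        self.active = name  # history is not reset, so context carries over

    def ask(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        reply = self.providers[self.active](self.history)
        self.history.append(
            {"role": "assistant", "content": reply, "provider": self.active}
        )
        return reply


# Stub adapters stand in for real vendor API calls.
def stub_a(history: List[Message]) -> str:
    return f"A saw {len(history)} message(s)"


def stub_b(history: List[Message]) -> str:
    return f"B saw {len(history)} message(s)"


session = Session(providers={"a": stub_a, "b": stub_b}, active="a")
first = session.ask("Summarize Q3 ticket sales.")
session.switch("b")
second = session.ask("Now compare with Q2.")
print(first)
print(second)
```

After the switch, the second stub receives all three earlier messages, which is the continuity property the runtime is described as preserving. A real adapter would also need to translate the shared message format into each vendor's request schema.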

In addition to the core orchestration and reasoning features, we also built a dedicated Admin console to manage knowledge configuration and validate system integrity. Its features include:

- Knowledge Configurator: Allows administrators to upload, manage, and precisely assign knowledge bases per user role, ensuring relevance and security.

- Self-Test Runner: Validates provider health, compliance, and data integrity through automated tests.
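As a rough illustration of the two console features, the sketch below models role-to-knowledge-base assignment as a plain mapping and a self-test runner as a loop over named checks. The role names, knowledge-base names, and the functions `kbs_for` and `run_self_tests` are assumptions made for this example, not the console's actual data model or API.

```python
from typing import Callable, Dict, List

# Hypothetical role-to-knowledge-base assignments an administrator might maintain.
ROLE_KBS: Dict[str, List[str]] = {
    "executive": ["board-reports", "brand-guidelines"],
    "analyst": ["match-data-dictionary", "brand-guidelines"],
}


def kbs_for(role: str) -> List[str]:
    """Return the knowledge bases assigned to a role; unknown roles get none."""
    return list(ROLE_KBS.get(role, []))


def run_self_tests(checks: Dict[str, Callable[[], bool]]) -> Dict[str, bool]:
    """Run each named check; a check that raises is recorded as a failure."""
    results: Dict[str, bool] = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            results[name] = False
    return results


def unreachable_provider() -> bool:
    # Simulates a provider health check that times out.
    raise TimeoutError("provider did not respond")


report = run_self_tests({
    "every_role_has_a_kb": lambda: all(ROLE_KBS.values()),
    "provider_health": unreachable_provider,
})
print(report)
```

Recording an exception as a failed check, rather than letting it abort the run, means one unhealthy provider does not hide the results of the remaining checks.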

This architecture brought together the intelligence of multiple models with the control and auditability the enterprise needed.

How the AI Assistant Works

For every query, the system follows a defined workflow.

- Query & Context: When a user submits a query, the system retrieves that user's role configuration and the knowledge base (KB) assigned to it, which grounds every response in the organization's domain context.

- Governance Enforcement: The Guardrail Engine validates the input query for any sensitive or restricted content (PII, IP, policy violation) before any model call is made. This crucial step ensures Responsible AI compliance from the start.

- Reasoning Selection: The Reasoning Controller dynamically determines how deeply the system needs to "think," from generating fast summaries for simple queries to initiating structured, multi-step reasoning for complex ones. This approach optimizes resource use by aligning computational effort with query complexity.

- Provider Orchestration: The Provider-Agnostic Runtime selects the optimal LLM for the task, while seamlessly preserving the user's session memory and intent.

- Response & Traceability: The chosen model executes the validated query. The final response is automatically tagged with the model provider, the reasoning path used, and the KB sources, so the origin of every answer stays transparent.
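The five steps above can be sketched as a single function. This is a simplified illustration under stated assumptions: the email regex stands in for the full set of PII, IP, and policy checks, the word-count heuristic stands in for the Reasoning Controller's real logic, and `call_model` is a stub rather than a real provider call. All function names, provider labels, and knowledge-base names are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Dict, List

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
ROLE_KB: Dict[str, List[str]] = {"marketing_manager": ["brand-guidelines"]}


@dataclass
class TaggedResponse:
    text: str
    provider: str
    reasoning: str
    kb_sources: List[str]


def check_guardrails(query: str) -> None:
    """Block the query before any model call if it contains an email address."""
    if EMAIL.search(query):
        raise ValueError("query blocked: contains PII")


def select_reasoning(query: str) -> str:
    """Toy heuristic: longer queries get deeper reasoning."""
    return "chain_of_thought" if len(query.split()) > 12 else "minimal"


def select_provider(reasoning: str) -> str:
    return "provider_deep" if reasoning == "chain_of_thought" else "provider_fast"


def call_model(provider: str, reasoning: str, kbs: List[str], query: str) -> str:
    # Stub: a real system would call the selected provider here.
    return f"[{provider}/{reasoning}] answer grounded in {', '.join(kbs)}"


def handle(role: str, query: str) -> TaggedResponse:
    kbs = ROLE_KB.get(role, [])             # 1. query and context
    check_guardrails(query)                 # 2. governance enforcement
    reasoning = select_reasoning(query)     # 3. reasoning selection
    provider = select_provider(reasoning)   # 4. provider orchestration
    text = call_model(provider, reasoning, kbs, query)
    return TaggedResponse(text, provider, reasoning, kbs)  # 5. tagged response


resp = handle("marketing_manager", "Draft a tagline for the new season.")
print(resp)
```

Because `check_guardrails` runs before `call_model`, a blocked query never reaches a provider, which mirrors the ordering described in the governance step.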

Responsible AI: Always at the Core

Every product we build embeds Responsible AI as an operational layer, not as an afterthought. In this project, that meant running guardrails before any model executes. Every system decision is logged: which model was chosen, the depth of reasoning applied, and which knowledge bases were consulted. Automated validation through the Self-Test Runner further strengthens this governance framework. This approach helped the client scale AI responsibly while maintaining confidence in governance and accuracy.
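One way to picture the decision log is as one structured record per request. The sketch below is an assumption about what such a record could contain, based only on the three decisions named above plus a guardrail outcome and a timestamp; the field names and the `decision_record` function are hypothetical.

```python
import json
from datetime import datetime, timezone
from typing import List


def decision_record(
    provider: str,
    reasoning: str,
    kb_sources: List[str],
    guardrail_passed: bool,
) -> str:
    """Serialize one request's decisions as a single JSON line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "provider": provider,
        "reasoning": reasoning,
        "kb_sources": kb_sources,
        "guardrail_passed": guardrail_passed,
    }
    return json.dumps(record, sort_keys=True)


line = decision_record("provider_fast", "minimal", ["brand-guidelines"], True)
parsed = json.loads(line)
print(line)
```

Writing one JSON object per line keeps the log append-only and easy to filter later by provider, reasoning mode, or knowledge base.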

What Changed for the Client After Deploying the AI Assistant?

Leveraging the Institutional Knowledge Platform (IKP) as the base architecture helped us achieve a 50% faster delivery cycle, allowing the client to operationalize their AI strategy sooner.

From a user’s perspective, the assistant feels seamless. Despite the complex workflow spanning multiple models, reasoning layers, and governance checks, it behaves like a single intelligent assistant that intuitively grasps context and intent.

For the enterprise, that same workflow operates within a tightly governed framework where every action is traceable, explainable, and compliant by design. The assistant now supports complex, day-to-day decision-making and scales confidently within the compliance standards of a global organization. Today, the client’s executive, analyst, and strategy teams all use the system, each supported by an assistant configured with its own dedicated knowledge base and instruction set.