The Akka Advantage

In the Akka framework, we have a contender that promises to spare us the concurrency pitfalls that plagued developers in the past. Akka is a message-driven toolkit for building concurrent, reliable, and distributed applications in Java and Scala. It hides most of the complexity of thread creation, message handling, race conditions, and synchronization behind the actor model. Akka does not guarantee message delivery by default (its delivery semantics are at-most-once), but it does let us balance load and scale a system up or down. A message-driven architecture is the foundation of every Akka-based system, so new functionality can usually be introduced without re-engineering the whole system. Because message passing is asynchronous and non-blocking, Akka also lets us build applications with lower latency than legacy, thread-per-request systems. Its blend of functional programming and actors lets us write code that is easy to understand and test, and that handles failures better than traditional JVM systems. The "let it crash" philosophy helps us build self-healing applications that keep running when individual components fail. This blog examines the Akka architecture and explores how it facilitates the development of a distributed, real-time, fault-tolerant trading application.

Application Requirement
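Akka can take over thread creation and synchronization because of one idea: each actor processes its mailbox one message at a time, so its private state never needs a lock. The sketch below models that idea in plain Java rather than with Akka itself.

- `ToyRateActor`, its methods, and `ToyRateActorDemo` are hypothetical names.
- A single-thread `ExecutorService` stands in for the mailbox and dispatcher that Akka provides.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Toy actor in plain Java (NOT Akka's API): one mailbox, one thread, private state.
class ToyRateActor {
    // The single-thread executor's queue acts as the mailbox: tasks run one at a time, in order.
    private final ExecutorService mailbox = Executors.newSingleThreadExecutor();
    // Private state, only ever touched from the mailbox thread, so no locks are needed.
    private final Map<String, Double> latestRates = new HashMap<>();
    private long updatesApplied = 0;

    // "tell": fire-and-forget; the sender does not wait for the update to be applied.
    void tellRateUpdate(String symbol, double price) {
        mailbox.execute(() -> {
            latestRates.put(symbol, price);
            updatesApplied++;
        });
    }

    // "ask": the reply is produced on the mailbox thread and returned through a future.
    CompletableFuture<Double> askRate(String symbol) {
        return CompletableFuture.supplyAsync(() -> latestRates.get(symbol), mailbox);
    }

    CompletableFuture<Long> askUpdateCount() {
        return CompletableFuture.supplyAsync(() -> updatesApplied, mailbox);
    }

    // Lets already-queued messages finish, then releases the mailbox thread.
    void stop() throws InterruptedException {
        mailbox.shutdown();
        mailbox.awaitTermination(5, TimeUnit.SECONDS);
    }
}

class ToyRateActorDemo {
    public static void main(String[] args) throws InterruptedException {
        ToyRateActor actor = new ToyRateActor();
        // Four "feed" threads send 1,000 updates each, concurrently, without any locking.
        Thread[] feeds = new Thread[4];
        for (int t = 0; t < feeds.length; t++) {
            final String symbol = "FEED" + t;
            feeds[t] = new Thread(() -> {
                for (int i = 0; i < 1000; i++) {
                    actor.tellRateUpdate(symbol, i);
                }
            });
            feeds[t].start();
        }
        for (Thread feed : feeds) {
            feed.join();
        }
        System.out.println(actor.askUpdateCount().join()); // 4000
        System.out.println(actor.askRate("FEED0").join()); // 999.0
        actor.stop();
    }
}
```

Four threads send 4,000 updates concurrently, yet the count is exact. That holds because only the mailbox thread ever touches `updatesApplied`.

Real Akka code uses the same pattern:

- You define the actor's message handlers and send it messages with `tell`.
- Akka schedules many actors on a shared thread pool instead of dedicating a thread to each.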

The requirement was to provide stock market trading data to our clients with minimal delay. Even a millisecond of delay could hurt our clients' day-trading performance. So we set out to build a system that has no downtime and is elastic and reactive.

What We Built

The system we built consists of multiple applications that work together as a single unit. They react to their environment while remaining aware of one another, and they interact internally to carry out complex tasks. We built the critical components with reactive, message-passing programming. This decoupled the components so they could be developed separately. Decoupling was necessary to isolate components and to meet our resilience and elasticity requirements.

Akka Actors to the Rescue!

The application logic resides in lightweight objects called actors, which send and receive messages. Actors have a very small memory footprint and are not mapped one-to-one to JVM threads. Instead, a shared pool of threads runs them as messages arrive. In a typical Akka application, a single JVM can host millions of actors, most of which sit idle until a message is sent to them. Around 2.7 million actors fit in 1 GB of memory. By contrast, a legacy thread-based approach tops out at only about 4,096 threads on a single VM, because each thread reserves its own stack. Everything actors do is asynchronous, so they can send messages without waiting for a reply. Akka's supervision strategies make it easy to configure each actor's life cycle and failure handling. The trading application we developed has two main workflows:

- Real-time trade rate updates
- Order processing, that is, handling the trades placed by users
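Supervision is what makes "let it crash" practical. Instead of every actor defending against every bad input, a parent decides what happens to a child that fails. The plain-Java sketch below (not Akka's API) models only the restart decision, synchronously.

- `OrderWorker`, `ToySupervisor`, the `"SYMBOL:quantity"` message format, and the restart limit are all hypothetical.
- Akka's actual supervision strategies offer more options, such as resuming, stopping, or escalating a failure.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Toy model of "let it crash" supervision in plain Java; NOT Akka's API.
// Hypothetical worker: parses "SYMBOL:quantity" orders and simply throws on bad input.
class OrderWorker {
    private int ordersHandled = 0; // per-instance state, discarded when the worker is restarted

    void receive(String order) {
        // No defensive error handling: a malformed order or non-positive quantity crashes the worker.
        int quantity = Integer.parseInt(order.split(":")[1]);
        if (quantity <= 0) {
            throw new IllegalArgumentException("bad quantity in " + order);
        }
        ordersHandled++;
    }

    int ordersHandled() {
        return ordersHandled;
    }
}

// Hypothetical supervisor: restarts a failed child up to maxRestarts times, then stops it.
class ToySupervisor {
    private final Supplier<OrderWorker> factory;
    private final int maxRestarts;
    private final List<String> droppedMessages = new ArrayList<>();
    private OrderWorker child;
    private int restarts = 0;
    private boolean childStopped = false;

    ToySupervisor(Supplier<OrderWorker> factory, int maxRestarts) {
        this.factory = factory;
        this.maxRestarts = maxRestarts;
        this.child = factory.get();
    }

    void deliver(String msg) {
        if (childStopped) {
            droppedMessages.add(msg); // no child left to process it
            return;
        }
        try {
            child.receive(msg);
        } catch (RuntimeException e) {
            droppedMessages.add(msg); // the message that caused the failure is not retried
            if (restarts < maxRestarts) {
                restarts++;
                child = factory.get(); // restart: replace the child with a fresh instance
            } else {
                childStopped = true; // restart budget exhausted: stop the child
            }
        }
    }

    int restarts() { return restarts; }
    boolean isChildStopped() { return childStopped; }
    List<String> droppedMessages() { return droppedMessages; }
    int childOrdersHandled() { return child.ordersHandled(); }
}

class SupervisionDemo {
    public static void main(String[] args) {
        ToySupervisor supervisor = new ToySupervisor(OrderWorker::new, 2);
        supervisor.deliver("AAPL:10");
        supervisor.deliver("MSFT:5");
        supervisor.deliver("GOOG:0"); // crashes the worker; the supervisor restarts it
        supervisor.deliver("TSLA:3"); // handled by the fresh instance
        System.out.println(supervisor.restarts());           // 1
        System.out.println(supervisor.childOrdersHandled()); // 1 (state was reset by the restart)
        System.out.println(supervisor.droppedMessages());    // [GOOG:0]
    }
}
```

The worker contains no recovery code at all. Recovery lives in one place, the supervisor, and a restart hands the next message a clean instance rather than one with possibly corrupted state.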

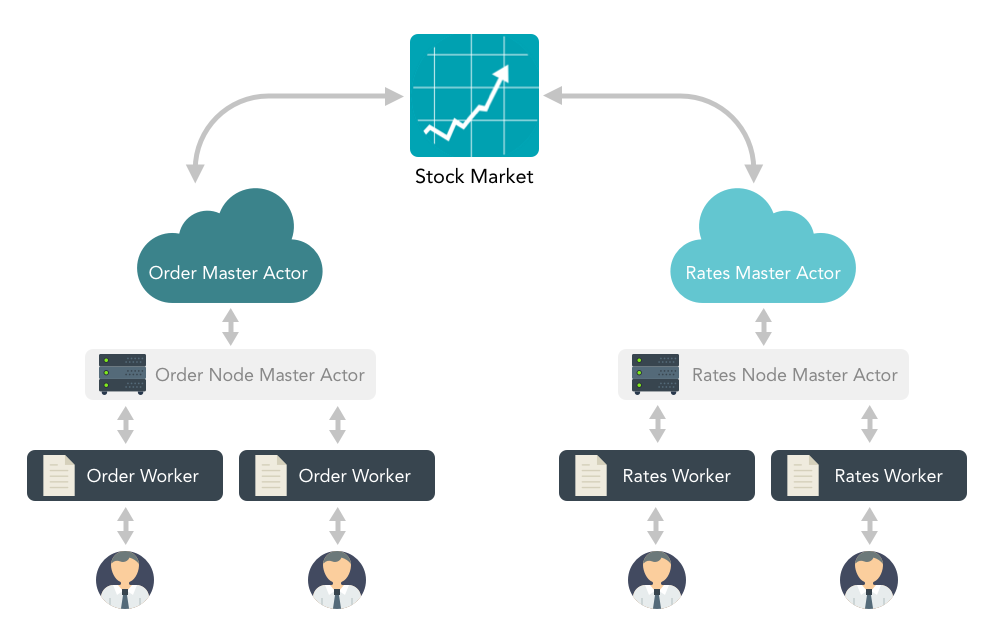

Akka actors in the Rate and Order workflows

The actor system we developed consists of a master actor that resides in the trading client module and a cluster manager actor for each instance in the cluster. Beneath them, worker actors spawned by the cluster managers carry out the actual business logic. This structure of loosely coupled Akka actors with adaptive routing gave us a lot of flexibility in scaling the cluster up or down. Our application needed to scale up as users logged in, without slowing down as the number of users grew. The trades placed by users also had to be processed without delay. An Akka-based solution was perfect for this job. When users log out, we scale down by stopping their actors and freeing up the resources.
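The hierarchy and scaling behavior described above can be modeled as a small, single-threaded Java sketch. It is not Akka code, and the following are assumptions made for illustration:

- the class names;
- the one-worker-per-logged-in-user policy;
- least-loaded placement, used as a simple stand-in for the adaptive routing mentioned above.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy, single-threaded model of the master / cluster manager / worker hierarchy; NOT Akka's API.
// Assumptions: one worker per logged-in user, placed on the least-loaded instance.

// Worker: where the business logic for one user's orders would run.
class SessionWorker {
    private final List<String> processedOrders = new ArrayList<>();

    void handle(String order) {
        processedOrders.add(order); // placeholder for real order processing
    }

    int processedCount() { return processedOrders.size(); }
}

// Cluster manager: one per instance; spawns and stops its own workers.
class ClusterManager {
    final String instanceName;
    private final Map<String, SessionWorker> workers = new HashMap<>();

    ClusterManager(String instanceName) { this.instanceName = instanceName; }

    int load() { return workers.size(); }
    void spawnWorker(String userId) { workers.put(userId, new SessionWorker()); }
    void stopWorker(String userId) { workers.remove(userId); } // drops the worker so its memory can be reclaimed
    SessionWorker worker(String userId) { return workers.get(userId); }
}

// Master: lives in the trading client module and decides which instance serves each user.
class Master {
    private final List<ClusterManager> managers = new ArrayList<>();
    private final Map<String, ClusterManager> ownerOf = new HashMap<>();

    void addInstance(String instanceName) { managers.add(new ClusterManager(instanceName)); }

    // Scale up on login: pick the instance with the fewest workers (ties go to the earliest added).
    // Assumes at least one instance has been added.
    String login(String userId) {
        ClusterManager target = managers.get(0);
        for (ClusterManager m : managers) {
            if (m.load() < target.load()) {
                target = m;
            }
        }
        target.spawnWorker(userId);
        ownerOf.put(userId, target);
        return target.instanceName;
    }

    // Route an order to the worker that owns the user's session.
    void placeOrder(String userId, String order) {
        ownerOf.get(userId).worker(userId).handle(order);
    }

    // Scale down on logout: stop the user's worker and forget the mapping.
    void logout(String userId) {
        ClusterManager m = ownerOf.remove(userId);
        if (m != null) {
            m.stopWorker(userId);
        }
    }

    int totalWorkers() {
        int total = 0;
        for (ClusterManager m : managers) {
            total += m.load();
        }
        return total;
    }

    int processedBy(String userId) { return ownerOf.get(userId).worker(userId).processedCount(); }
}

class HierarchyDemo {
    public static void main(String[] args) {
        Master master = new Master();
        master.addInstance("node-a");
        master.addInstance("node-b");
        System.out.println(master.login("alice")); // node-a
        System.out.println(master.login("bob"));   // node-b
        System.out.println(master.login("carol")); // node-a
        master.placeOrder("bob", "BUY MSFT 5");
        master.logout("alice");
        System.out.println(master.totalWorkers()); // 2
    }
}
```

A real deployment would run these as actors exchanging messages across nodes, and the master would also need to track instances joining and leaving the cluster.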