In the world of big data analytics, PySpark, the Python API for Apache Spark, has gained a lot of traction because it enables rapid development. Apart from Python, Spark provides high-level APIs in Java, Scala, and R. Despite the simplicity of the Python interface, setting up and running a new PySpark project involves long commands. Take, for example, the following command for a new project:

$SPARK_HOME/bin/spark-submit \
  --master local[*] \
  --packages 'com.somesparkjar.dependency:1.0.0' \
  --py-files packages.zip \
  --files configs/etl_config.json \
  jobs/etl_job.py

It is NOT the most convenient or intuitive method to create a simple file structure.
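To make the parts of that command easier to see, here is a minimal Python sketch that assembles the same argument list. The helper name build_spark_submit and its parameters are hypothetical and exist only for this illustration. The flags themselves come from the command shown earlier.

```python
def build_spark_submit(job, master="local[*]", packages=None,
                       py_files=None, files=None, spark_home="$SPARK_HOME"):
    """Return the spark-submit argument list for a single job file."""
    cmd = [f"{spark_home}/bin/spark-submit", "--master", master]
    # Each of these options takes a comma-separated list.
    if packages:
        cmd += ["--packages", ",".join(packages)]
    if py_files:
        cmd += ["--py-files", ",".join(py_files)]
    if files:
        cmd += ["--files", ",".join(files)]
    cmd.append(job)  # the application file always comes last
    return cmd


command = build_spark_submit(
    "jobs/etl_job.py",
    packages=["com.somesparkjar.dependency:1.0.0"],
    py_files=["packages.zip"],
    files=["configs/etl_config.json"],
)
print(" ".join(command))
```

Even in this form, every project has to carry its own copy of these details, which is the overhead a project tool can take away.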

So is there an easy way to get started with PySpark?

Introducing PySpark CLI, a tool to create and manage end-to-end PySpark projects. With sensible defaults, it helps new users create projects with short commands. Experienced users can use PySpark CLI to manage their PySpark projects more efficiently.

PySpark CLI generates the project folder structure along with the required configuration files and boilerplate code with which you can dive right into your project. The folder structure is designed for easy understanding and customization so you can make changes suited to the project you’re working on. Even as is, the folder structure is suitable for projects covering various applications.

We have a video tutorial that shows how you can start your project with PySpark CLI. If you are new to Python and PySpark, watch our Quick Intro to PySpark.

Writing Your First PySpark App

Let’s learn by example. We assume you already have PySpark installed. You can check whether PySpark is installed by running the following command at a shell prompt (indicated by the $ prefix):

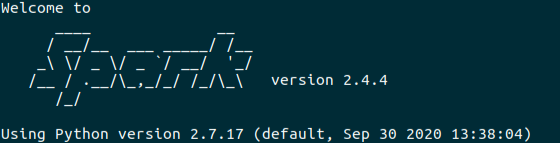

$ pyspark

If PySpark is installed, you should see the version of your installation. If it isn’t, you’ll get an error. This tutorial is written for Spark 2.4.4, which supports Python 2.7.15 and later versions.
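The same check can be done from Python without starting the shell. The sketch below is only an illustration: the function name pyspark_status is hypothetical, and it reports two separate things, whether a pyspark launcher is on your PATH and whether the pyspark module can be imported by the current interpreter.

```python
import importlib.util
import shutil


def pyspark_status():
    """Report whether PySpark looks reachable from this environment."""
    return {
        # True when a `pyspark` executable is found on PATH.
        "launcher_on_path": shutil.which("pyspark") is not None,
        # True when `import pyspark` would find a module.
        "module_importable": importlib.util.find_spec("pyspark") is not None,
    }


print(pyspark_status())
```

The two values can differ, for example when Spark was unpacked manually but the pyspark package was never installed into the active Python environment.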

Environment Setup

Let’s set up the environment required for working with PySpark projects. For installation on Ubuntu, follow these steps:

- Download and install JDK 8 or above. Before you can start with Spark and Hadoop, make sure you have Java 8 installed. You can check this by running the following command:

java -version

- Download and install the latest distribution of Apache Spark.

- Create a directory named “spark” in your home directory with the following command:

mkdir spark

- Move spark-2.4.4-bin-hadoop2.7.tgz into the spark directory and extract it:

mv ~/Downloads/spark-2.4.4-bin-hadoop2.7.tgz spark

cd spark/

tar -xzvf spark-2.4.4-bin-hadoop2.7.tgz

After extracting the file, go to the bin directory of the Spark installation and run ./pyspark. This opens the PySpark shell.

Configure Apache Spark. Next, add Spark to your PATH so that it can be run from any directory.

- Open the bash_profile file:

vi ~/.bash_profile

- Add the following entries:

export SPARK_HOME=~/spark/spark-2.4.4-bin-hadoop2.7/

export PATH="$SPARK_HOME/bin:$PATH"

- Run the following command to update the PATH variable in the current session:

source ~/.bash_profile

- After your next login, the pyspark command should be on your PATH and accessible from any directory.

- Check the PySpark installation: In your Anaconda prompt, or any command prompt where Python is available, type pyspark to enter the PySpark shell. It is best to run this check in the same Python environment from which you launch Jupyter Notebook. You should see the PySpark shell start.
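If the pyspark command is still not found, the SPARK_HOME and PATH entries are the first thing to check. The following is a minimal sketch of such a check. The function name check_spark_env is hypothetical, and it only inspects the environment mapping it is given. It does not verify that Spark itself works.

```python
import os


def check_spark_env(env):
    """Return a list of problems found in SPARK_HOME / PATH settings."""
    spark_home = env.get("SPARK_HOME", "")
    if not spark_home:
        return ["SPARK_HOME is not set"]

    # normpath removes trailing and doubled slashes, so
    # ".../hadoop2.7/" + "/bin" still compares equal to ".../hadoop2.7/bin".
    bin_dir = os.path.normpath(os.path.join(spark_home, "bin"))
    path_entries = [
        os.path.normpath(entry)
        for entry in env.get("PATH", "").split(os.pathsep)
        if entry
    ]
    if bin_dir not in path_entries:
        return [f"{bin_dir} is not on PATH"]
    return []


# Inspect the environment of the current process.
print(check_spark_env(os.environ))
```

An empty list means both variables are consistent with the entries added to the profile file.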

Test the installation using the following commands. The output should be [1, 4, 9, 16].

$ pyspark

>>> nums = sc.parallelize([1,2,3,4])

>>> nums.map(lambda x: x*x).collect()

To exit the PySpark shell, press Ctrl+D (on Windows, Ctrl+Z followed by Enter) or use the Python command exit().
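Inside the shell, sc is created for you. In a standalone script you have to create the SparkContext yourself. The sketch below shows one way to do that, with hypothetical function names square_all and run_on_spark. It falls back to plain Python when no context is passed, so the expected result can be checked even on a machine without Spark.

```python
def square_all(values, sc=None):
    """Square each value, on Spark when a SparkContext is supplied."""
    if sc is None:
        # Plain-Python equivalent of the RDD pipeline below.
        return [x * x for x in values]
    # Same steps as in the shell: distribute, transform, gather.
    return sc.parallelize(values).map(lambda x: x * x).collect()


def run_on_spark(values):
    """Create a local SparkContext, run the job, and always stop it."""
    # Imported here so this file still loads where PySpark is missing.
    from pyspark import SparkContext

    sc = SparkContext(master="local[*]", appName="squares")
    try:
        return square_all(values, sc)
    finally:
        sc.stop()


print(square_all([1, 2, 3, 4]))  # [1, 4, 9, 16]
```

Calling run_on_spark([1, 2, 3, 4]) should give the same list once Spark and Java are set up as described in this section.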

Installing PySpark CLI

Using Source

git clone https://github.com/qburst/PySparkCLI.git

cd PySparkCLI

pip3 install -e . --user

Using PyPI

pip3 install pyspark-cli

Commands

1. Create a new project: pysparkcli create [project-name]

Run the following command to create a project named sample:

pysparkcli create sample -m local[*] -c 2 -t default

master: the URL of the cluster to connect to. You can also use -m instead of --master.

project_type: the type of project you want to create, such as default or streaming. You can also use -t instead of --project_type.

cores: the number of parallel tasks an executor can run. You can also use -c instead of --cores.

You'll see the following in your command line:

Completed building project: sample
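To illustrate how the short and long option names relate, here is a small argparse sketch that accepts the same flags. It is not PySpark CLI's actual implementation. The program name pysparkcli-sketch and the default values are assumptions made for this example.

```python
import argparse


def build_parser():
    """Build a parser that mimics the options of the create command."""
    parser = argparse.ArgumentParser(prog="pysparkcli-sketch")
    subcommands = parser.add_subparsers(dest="command", required=True)

    create = subcommands.add_parser("create")
    create.add_argument("project_name")
    # Defaults below are assumptions, not documented PySpark CLI defaults.
    create.add_argument("-m", "--master", default="local[*]")
    create.add_argument("-c", "--cores", type=int, default=2)
    create.add_argument("-t", "--project_type", default="default")
    return parser


args = build_parser().parse_args(
    ["create", "sample", "-m", "local[*]", "-c", "2", "-t", "default"]
)
print(args.project_name, args.master, args.cores, args.project_type)
```

Passing --master, --cores, and --project_type instead of the short forms produces the same parsed values.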

2. Run the project by path: pysparkcli run [project-path]

Run the following commands to run your project sample:

virtualenv --python=/usr/bin/python3.7 sample.env

source sample.env/bin/activate

pysparkcli run sample

You'll see the following in your command line:

Started running project: sample/

19/11/25 10:37:07 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Hello World

3. Initiate the stream: pysparkcli stream [project-path] [stream-file-path].

Run the following command to stream data for the project sample using the twitter_stream file:

pysparkcli stream sample twitter_stream

You'll see output like the following in your command line (this sample run used a project named test):

(streaming_project_env) ➜ checking git:(docs_develop) ✗ pysparkcli stream test twitter_stream

Started streaming of project: test

Requirement already satisfied: certifi==2019.11.28 in ./streaming_project_env/lib/python3.6/site-packages (from -r test/requirements.txt (line 1))

Requirement already satisfied: chardet==3.0.4 in ./streaming_project_env/lib/python3.6/site-packages (from -r test/requirements.txt (line 2))

Requirement already satisfied: idna==2.8 in ./streaming_project_env/lib/python3.6/site-packages (from -r test/requirements.txt (line 3))

Requirement already satisfied: oauthlib==3.1.0 in ./streaming_project_env/lib/python3.6/site-packages (from -r test/requirements.txt (line 4))

Requirement already satisfied: py4j==0.10.7 in ./streaming_project_env/lib/python3.6/site-packages (from -r test/requirements.txt (line 5))

Requirement already satisfied: PySocks==1.7.1 in ./streaming_project_env/lib/python3.6/site-packages (from -r test/requirements.txt (line 6))

Requirement already satisfied: pyspark==2.4.4 in ./streaming_project_env/lib/python3.6/site-packages (from -r test/requirements.txt (line 7))

Requirement already satisfied: requests==2.22.0 in ./streaming_project_env/lib/python3.6/site-packages (from -r test/requirements.txt (line 8))

Requirement already satisfied: requests-oauthlib==1.3.0 in ./streaming_project_env/lib/python3.6/site-packages (from -r test/requirements.txt (line 9))

Requirement already satisfied: six==1.13.0 in ./streaming_project_env/lib/python3.6/site-packages (from -r test/requirements.txt (line 10))

Requirement already satisfied: tweepy==3.8.0 in ./streaming_project_env/lib/python3.6/site-packages (from -r test/requirements.txt (line 11))

Requirement already satisfied: urllib3==1.25.7 in ./streaming_project_env/lib/python3.6/site-packages (from -r test/requirements.txt (line 12))

Listening on port: 5555
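The final line of that output suggests the stream file opens a local socket that a streaming job can read from. That is an assumption about the tool's behavior, not something confirmed here. As a rough, standard-library-only illustration of the idea, the sketch below starts a one-shot TCP server that sends a few lines to the first client that connects. The names serve_lines and read_all are hypothetical.

```python
import socket
import threading


def serve_lines(lines, host="127.0.0.1", port=0):
    """Send each line to the first client that connects.

    port=0 lets the operating system pick a free port.
    Returns the bound port and the background thread.
    """
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, port))
    server.listen(1)
    bound_port = server.getsockname()[1]

    def handle():
        with server:
            conn, _ = server.accept()
            with conn:
                for line in lines:
                    conn.sendall((line + "\n").encode("utf-8"))

    thread = threading.Thread(target=handle, daemon=True)
    thread.start()
    return bound_port, thread


def read_all(host, port):
    """Connect, read until the server closes, and return the lines."""
    chunks = []
    with socket.create_connection((host, port), timeout=5) as client:
        while True:
            data = client.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8").splitlines()


port, worker = serve_lines(["tweet one", "tweet two"])
print(read_all("127.0.0.1", port))
worker.join(timeout=5)
```

In a real streaming project, the reader side would be a Spark Streaming job rather than the read_all helper used here.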

4. Run the test by path: pysparkcli test [project-path]

Run the following command to run all tests for your project sample:

pysparkcli test sample

You'll see the following in your command line:

```
Started running test cases for project: sample
19/12/09 14:02:13 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
/usr/lib/python3.7/socket.py:660: ResourceWarning: unclosed
  self._sock = None
ResourceWarning: Enable tracemalloc to get the object allocation traceback
/usr/lib/python3.7/socket.py:660: ResourceWarning: unclosed
  self._sock = None
ResourceWarning: Enable tracemalloc to get the object allocation traceback
.
Ran 1 test in 6.041s

OK
```
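The "Ran 1 test ... OK" summary is the format printed by Python's unittest runner. For readers who have not written such a test before, here is a minimal sketch of one. The function word_counts and the test class are hypothetical and are not part of the code that PySpark CLI generates. The logic is kept in plain Python so the test runs without a Spark session.

```python
import unittest


def word_counts(lines):
    """Count how often each whitespace-separated word occurs."""
    counts = {}
    for line in lines:
        for word in line.split():
            counts[word] = counts.get(word, 0) + 1
    return counts


class WordCountTest(unittest.TestCase):
    def test_counts_repeated_words(self):
        result = word_counts(["hello world", "hello"])
        self.assertEqual(result, {"hello": 2, "world": 1})


def run_suite():
    """Run the test case programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(WordCountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_suite()
```

Keeping transformation logic in small functions like this makes it possible to test most of a job before involving a cluster.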

5. Check the version: pysparkcli version

Conclusion

Even though PySpark CLI can create and manage projects, there are more possibilities to be explored. The following are a few that we think would help the project at the current stage:

- Custom integration for different databases during the project creation itself.

- Instead of providing project details as arguments, accept file-based input, such as a YAML or JSON file.

- Generate dynamic tests for projects.
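As a rough idea of what the file-based input could look like, the sketch below turns a JSON document into the arguments of the create command described earlier. This is purely illustrative and does not describe an existing feature. The JSON keys, including "name", and the function create_args_from_json are assumptions.

```python
import json

# Maps hypothetical JSON keys to the long option names of `create`.
FLAG_FOR_KEY = {
    "master": "--master",
    "cores": "--cores",
    "project_type": "--project_type",
}


def create_args_from_json(text):
    """Translate a JSON project description into a create command."""
    config = json.loads(text)
    args = ["pysparkcli", "create", config["name"]]
    for key, flag in FLAG_FOR_KEY.items():
        if key in config:
            args += [flag, str(config[key])]
    return args


example = '{"name": "sample", "master": "local[*]", "cores": 2, "project_type": "default"}'
print(" ".join(create_args_from_json(example)))
```

Keys that are missing from the file are simply left out, so the tool's own defaults would still apply.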

Learning is a never-ending process, and we hope PySpark CLI helps you start that project you always wanted to build but never did.

Do you have some interesting features to suggest or implement? Please do raise them here. Also, check out our contribution guidelines.