Enterprises wrestle with a dilemma at the start of every cloud journey. Should they opt for a cloud-native, cloud-agnostic, or cloud-portable approach?

Committing to a single cloud provider makes them wary. What if they end up stuck with that provider forever? The fear of vendor lock-in is real enough that many opt for a cloud-agnostic approach.

But a cloud-agnostic approach means maintaining self-managed clusters—a logistical nightmare of its own!

Our enterprise client leaned toward cloud-agnostic. We suggested a cloud-portable approach instead, which offers the best of both worlds.

Why Our Client Chose Cloud-Agnostic Initially

The question of which cloud strategy to adopt came up as we were about to build a platform for our client to handle high-volume data (~6M requests in 20 minutes) and complex business logic for tracking and reporting. The client owns multiple sub-brands and wanted to deploy the platform on each sub-brand's infrastructure, using that sub-brand's preferred cloud provider: some preferred Azure, while others opted for GCP.
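
For a sense of scale, that volume averages out to roughly 5,000 requests per second over the 20-minute window. This is an average, so any burstiness in the traffic pushes peak rates higher:

$$
\frac{6 \times 10^{6}\ \text{requests}}{20 \times 60\ \text{s}} = \frac{6 \times 10^{6}\ \text{requests}}{1200\ \text{s}} = 5000\ \text{requests/s}
$$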

The client suggested a cloud-agnostic approach because it would let them move between cloud environments based on management decisions, cost-effectiveness, performance metrics, and similar factors. It would also free them from the constraints of proprietary services, giving them more flexibility in their cloud strategy.

The Downside of the Cloud-Agnostic Approach

The cloud-agnostic approach has gained popularity in recent years, primarily because it lets businesses choose among multiple cloud providers. Enterprises keep their negotiating leverage and can secure better pricing and service agreements.

While this seems logical and advantageous, the approach has a notable downside. Going cloud-agnostic means sacrificing the benefits of cloud-managed native services in favor of maintaining self-managed clusters.

In the high-ingress data processing scenario described above, this means operating large Kafka clusters, serverless functions, and Cassandra databases, along with their complex scaling requirements.

Running these components can quickly become a maintenance headache. It demands significant technical expertise and diverts valuable time and resources away from core business objectives.

Our Suggestion: Cloud-Portable Approach—The Best of Both Worlds

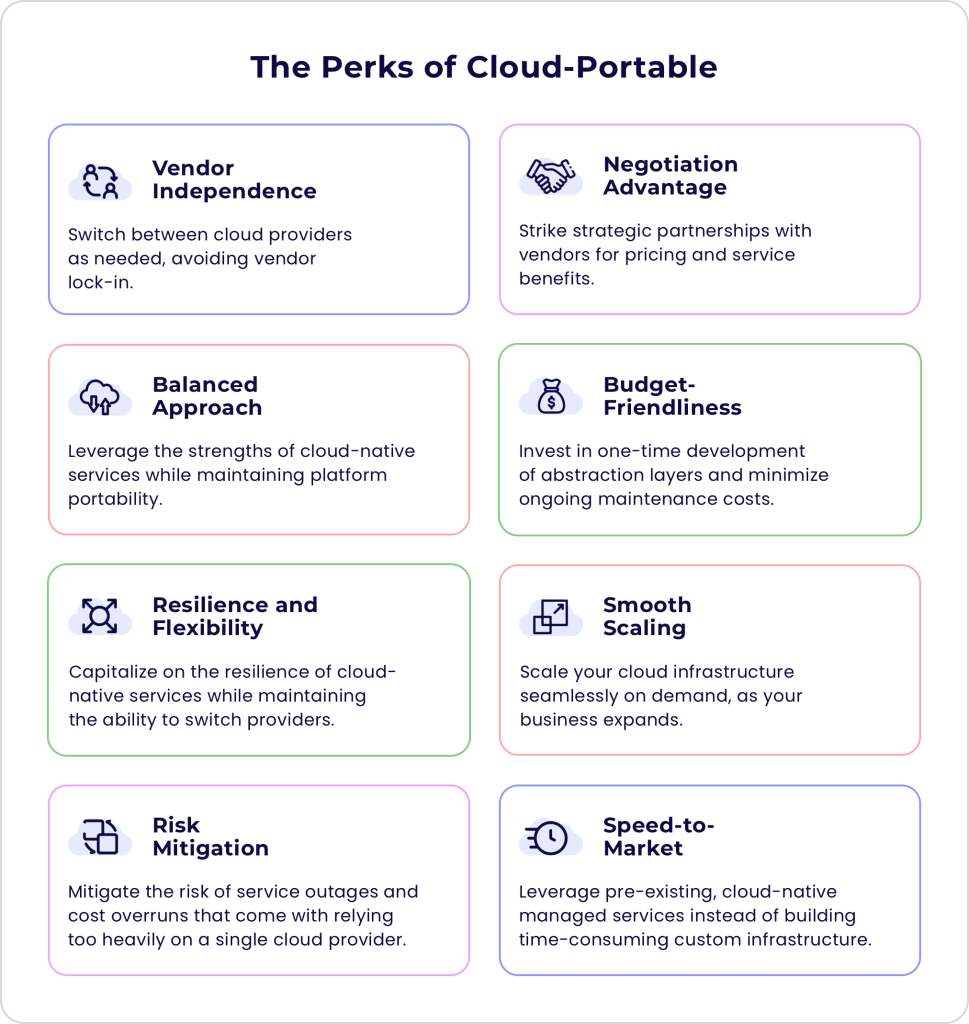

The cloud-portable approach aims to strike a balance between the portability of cloud-agnostic designs and the convenience of cloud-native managed services. It lets businesses use cloud-native services while keeping the entire platform portable across cloud providers. As a result, the platform can take advantage of fully managed serverless services without the burden of running complex infrastructure itself.

Abstractions and How They Work

At the heart of the cloud-portable approach are abstractions over cloud services. The core business logic relies on custom abstractions instead of directly depending on a specific cloud provider's Software Development Kit (SDK).

Consider a practical scenario involving a wide-column or key-value store that must be easy to port between Azure and GCP. The Azure implementation uses Table Storage, while the GCP implementation uses BigTable. If the core business logic is tightly coupled to a particular cloud provider's SDK, we end up with two different code bases to maintain and deploy, which is a maintenance headache. Furthermore, every change to the core code would require a QA cycle for both implementations.

The cloud-portable approach takes a different route. We create custom private packages (for example, an npm package for Node.js or a NuGet package for .NET) that contain a generic abstraction (such as IColumnStore) and a concrete implementation for each cloud provider (Azure TableStorage and GCP BigTable).

The core business code relies solely on the generic abstraction (IColumnStore), while the concrete implementation is injected into the business layer as a dependency (dependency injection, an application of the Dependency Inversion Principle). The application configuration drives the choice between Azure TableStorage and GCP BigTable. (What does the implementation look like? Jump to Part 2 for a detailed walkthrough.)
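
To make the pattern concrete, here is a minimal TypeScript sketch, not the implementation from Part 2. `IColumnStore` is the name used above; its method set, the `TableStorageColumnStore`, `BigTableColumnStore`, and `TrackingService` classes, `createColumnStore`, and the `provider` config key are all hypothetical. So that the sketch runs without cloud credentials, both adapters keep rows in memory, mirroring only how each service addresses a row (PartitionKey plus RowKey in Table Storage, a single row key in Bigtable). A real adapter would call the official Azure or Google Cloud client library at those points.

```typescript
type Row = Record<string, string>;

// Generic abstraction the business layer depends on. The name IColumnStore is from the
// article; this method set is a hypothetical minimum for the sketch.
interface IColumnStore {
  upsert(partition: string, rowKey: string, columns: Row): Promise<void>;
  get(partition: string, rowKey: string): Promise<Row | undefined>;
}

// Azure adapter (hypothetical, in-memory stand-in). Table Storage addresses an entity by
// PartitionKey + RowKey, mirrored here with nested maps instead of real SDK calls.
class TableStorageColumnStore implements IColumnStore {
  private readonly partitions = new Map<string, Map<string, Row>>();

  async upsert(partition: string, rowKey: string, columns: Row): Promise<void> {
    let rows = this.partitions.get(partition);
    if (!rows) {
      rows = new Map<string, Row>();
      this.partitions.set(partition, rows);
    }
    rows.set(rowKey, { ...rows.get(rowKey), ...columns }); // merge new columns into the entity
  }

  async get(partition: string, rowKey: string): Promise<Row | undefined> {
    return this.partitions.get(partition)?.get(rowKey);
  }
}

// GCP adapter (hypothetical, in-memory stand-in). Bigtable has a single row key,
// so partition and row key are combined into one string.
class BigTableColumnStore implements IColumnStore {
  private readonly rows = new Map<string, Row>();

  private static rowKeyOf(partition: string, rowKey: string): string {
    return `${partition}#${rowKey}`;
  }

  async upsert(partition: string, rowKey: string, columns: Row): Promise<void> {
    const key = BigTableColumnStore.rowKeyOf(partition, rowKey);
    this.rows.set(key, { ...this.rows.get(key), ...columns }); // overwrite given cells, keep the rest
  }

  async get(partition: string, rowKey: string): Promise<Row | undefined> {
    return this.rows.get(BigTableColumnStore.rowKeyOf(partition, rowKey));
  }
}

// Business layer: knows only IColumnStore, which it receives through its constructor.
class TrackingService {
  private readonly store: IColumnStore;

  constructor(store: IColumnStore) {
    this.store = store;
  }

  async recordStatus(brand: string, itemId: string, status: string): Promise<void> {
    await this.store.upsert(brand, itemId, { status });
  }

  async statusOf(brand: string, itemId: string): Promise<string | undefined> {
    return (await this.store.get(brand, itemId))?.status;
  }
}

// Composition root: configuration, not business code, picks the provider.
interface CloudConfig {
  provider: "azure" | "gcp";
}

function createColumnStore(config: CloudConfig): IColumnStore {
  switch (config.provider) {
    case "azure":
      return new TableStorageColumnStore();
    case "gcp":
      return new BigTableColumnStore();
    default:
      // Guards against bad values coming from untyped sources such as environment variables.
      throw new Error(`Unsupported cloud provider: ${String(config.provider)}`);
  }
}
```

With this wiring, moving a sub-brand from Azure to GCP means changing `provider` in its configuration. `TrackingService` and the rest of the business layer stay untouched, which is what lets a single code base serve both clouds.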

There are also excellent tools, such as MassTransit, ActiveMQ, the Serverless Framework, and ORMs, that can support portability without custom implementations.

This approach lets businesses use the cloud-native managed services specific to each provider while keeping the overall platform portable. With this flexibility, they can switch between cloud providers without extensive changes to their applications. Enterprises can then focus on their core business objectives instead of being bogged down by maintaining and scaling extensive cloud infrastructure.

Cloud-Portable Is More Cost-Efficient

In the cloud-portable approach, building the abstraction layer and baking it into the core framework is largely a one-time cost, whereas managing complex infrastructure in the cloud-agnostic approach is a recurring one. That makes the cloud-portable approach a balanced, pragmatic way forward for businesses seeking the best of both worlds.

Our Cloud-Portable Solution

The solution we built for our client has a fully serverless architecture. It uses cloud-managed services such as Azure Event Hubs / GCP Pub/Sub, Azure Functions / GCP Cloud Functions, and Azure TableStorage / GCP BigTable to handle high data ingestion and perform the complex computations the business requires. Its main strengths are cost efficiency and low maintenance, which make it a powerful and sustainable system for our client's needs.
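
The same separation can apply to the serverless entry points. In this hypothetical TypeScript sketch, one provider-neutral function holds the business logic, and a small adapter per cloud converts that cloud's trigger payload into a common event type. The payload shapes are simplified assumptions: Pub/Sub message data is base64-encoded, and the Event Hubs batch is assumed to arrive as already-parsed JSON objects. `TrackingEvent`, `handleEvents`, and the adapter and entry-point names are illustrative. Registering the functions with Azure Functions or Cloud Functions is omitted so the sketch runs on plain Node.js.

```typescript
// Provider-neutral event shape (hypothetical) that the core logic understands.
interface TrackingEvent {
  brand: string;
  eventId: string;
  type: string;
}

// Core business logic, identical on both clouds. Here it only counts events per brand and type.
function handleEvents(events: TrackingEvent[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const e of events) {
    const key = `${e.brand}:${e.type}`;
    counts.set(key, (counts.get(key) ?? 0) + 1);
  }
  return counts;
}

// GCP adapter: Pub/Sub message data arrives base64-encoded (simplified shape, no schema validation).
function fromPubSub(message: { data: string }): TrackingEvent {
  const json = Buffer.from(message.data, "base64").toString("utf8");
  return JSON.parse(json) as TrackingEvent;
}

// Azure adapter: assumes a batched Event Hubs trigger that delivers already-parsed JSON bodies.
function fromEventHubBatch(messages: unknown[]): TrackingEvent[] {
  return messages.map((m) => m as TrackingEvent);
}

// Thin entry points. In a real deployment, each would be registered with its platform's trigger.
function onPubSubMessage(message: { data: string }): Map<string, number> {
  return handleEvents([fromPubSub(message)]);
}

function onEventHubBatch(messages: unknown[]): Map<string, number> {
  return handleEvents(fromEventHubBatch(messages));
}
```

Keeping the adapters this thin means the logic that needs a full QA cycle exists once, not once per cloud.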

Jump to Part 2 to learn more about the actual implementation, the techniques we used, and how we achieved portability between Azure and GCP.