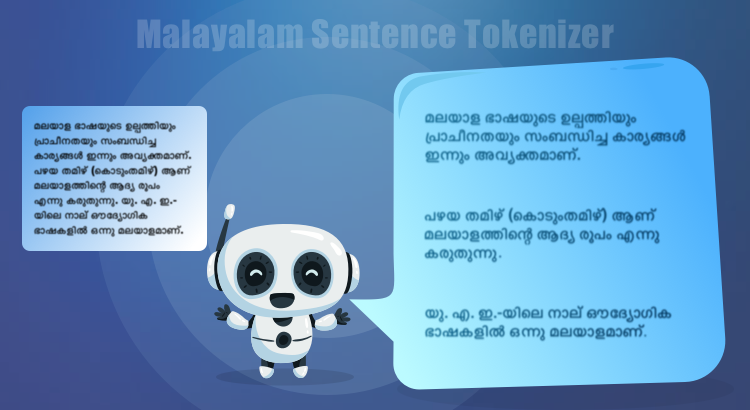

Imagine that you are working on machine translation or a similar Natural Language Processing (NLP) problem. Can you process the corpus as a whole? No. You will have to break it into sentences first, and then into words. This process of splitting an input corpus into smaller subunits is known as tokenization, and the resulting units are called tokens. For instance, when a paragraph is split into sentences, each sentence is a token; when a sentence is split into words, each word is a token. This is a fairly straightforward process in English, but not so in Malayalam (and some other Indic languages).
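To make the two levels of tokenization concrete, here is a minimal sketch for English text using only Python's standard `re` module. The function names `split_sentences` and `split_words` and the sample corpus are made up for illustration, and the rules are deliberately naive: a sentence is assumed to end at `.`, `!` or `?` followed by whitespace, which already breaks on abbreviations such as "Dr.". It is not meant to handle Malayalam.

```python
import re


def split_sentences(text):
    """Split text into sentence tokens (naive, English-only assumption)."""
    # Split on whitespace that follows a sentence-ending mark.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def split_words(sentence):
    """Split one sentence into word tokens and punctuation tokens."""
    # A run of word characters is one token; each punctuation mark is its own token.
    return re.findall(r"\w+|[^\w\s]", sentence)


# Hypothetical sample corpus, used only for this illustration.
corpus = "Tokenization is simple. Or is it? Let us see!"

sentences = split_sentences(corpus)
words = [split_words(s) for s in sentences]

for sentence, tokens in zip(sentences, words):
    print(sentence, "->", tokens)
```

Each sentence is first treated as a token of the corpus, and is then itself broken into word-level tokens. Real tokenizers add many more rules on top of this, but the overall shape stays the same.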
